Nov 11 2024

Generative AI (GenAI) is still in its infancy, but its impact is already being felt across industries. Over the past year, GenAI applications have moved from proof of concept to production, where they deliver real-world value. According to the World Economic Forum, 75% of surveyed companies plan to adopt AI technologies by 2027, and leading cloud providers like AWS are investing heavily in GenAI.

We are now at a tipping point where GenAI is not just an experiment but a cornerstone of operational systems. For businesses, this means unlocking new efficiencies and capabilities—especially in observability.

Applying GenAI to Observability

GenAI and observability are a natural match, promising outsized value for developers and DevOps teams. When troubleshooting production issues, developers often sift through streams of data (logs, metrics, and traces) to piece together the root cause. Depending on the complexity of the issue, this can take minutes, hours, or even days.

What if this analysis, which typically demands hours of human effort, could be accomplished in seconds? That’s the transformative potential of GenAI in observability. By drastically reducing Mean Time to Resolution (MTTR), GenAI can help developers prevent costly downtime and focus on delivering innovation.

The Lumigo AI Advantage

The effectiveness of any AI model depends on the quality of data it analyzes. Lumigo’s unique advantage lies in the completeness and accuracy of its data:

- Payload Data: Lumigo captures request and response payloads, offering developers an end-to-end view of system behavior.

- Trace Completeness: Trace completeness is critical for identifying root causes upstream in complex systems. Lumigo keeps each trace intact so the root cause of any error can be uncovered.

- Correlated Context: Lumigo seamlessly correlates traces, logs, and metrics, presenting all relevant data in context. This enables both human developers and AI models to quickly and accurately diagnose issues.
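To make "correlated context" concrete, here is a minimal sketch that groups spans, logs, and per-invocation metrics under the trace they belong to. It assumes every record carries a shared `trace_id` field; that field name, the sample records, and the `correlate` helper are hypothetical and do not describe Lumigo’s internals.

```python
from collections import defaultdict

# Hypothetical telemetry records. The shared "trace_id" field is an assumption
# about how signals are linked, not a description of Lumigo's data model.
spans = [{"trace_id": "t1", "name": "processOrder", "status": "error"}]
logs = [
    {"trace_id": "t1", "message": "write to orders table failed"},
    {"trace_id": "t2", "message": "order 17 delivered"},
]
metrics = [{"trace_id": "t1", "name": "duration_ms", "value": 912}]

def correlate(spans: list, logs: list, metrics: list) -> dict:
    """Group spans, logs, and metrics under the trace ID they share."""
    context = defaultdict(lambda: {"spans": [], "logs": [], "metrics": []})
    for kind, records in (("spans", spans), ("logs", logs), ("metrics", metrics)):
        for record in records:
            context[record["trace_id"]][kind].append(record)
    return dict(context)

ctx = correlate(spans, logs, metrics)
failing = ctx["t1"]  # everything related to the failed request, in one place
print(failing)
```

Handing `failing` to a person or a model gives them the span, its log lines, and its measurements together, instead of three separate searches.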

By leveraging this rich dataset, Lumigo’s AI provides unparalleled insights. It doesn’t just point to problems; it helps developers understand the root cause and suggests actionable fixes, all in seconds. For organizations where every minute of downtime can cost millions in lost revenue, these capabilities are game-changing.

Empowering Developers of All Levels

Not all developers are seasoned experts, and relying on senior developers for every issue is unsustainable. Lumigo’s AI acts as a co-pilot, empowering developers of all experience levels to troubleshoot effectively. By democratizing access to expert-level insights, Lumigo frees up senior developers to focus on innovation rather than firefighting.

This not only improves team efficiency but also reduces burnout and attrition in R&D and SRE teams.

AI Observability in Action

Imagine an observability tool deployed by a food delivery service. What if it could detect an issue and carry it all the way through to auto-remediation? That’s what Lumigo is building. In this example, the AI:

- Detects an issue, such as delivery orders not being processed in a food service system.

- Sends a Slack notification with the root cause, e.g., an API returning an error due to incorrect database formatting.

- Provides contextual information, such as who made recent code changes and when.

- Suggests a code fix, allowing developers to review, commit, and resolve the issue—all within minutes.

This is the workflow Lumigo’s AI-powered observability is designed to deliver.
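As an illustration of the notification step, the sketch below turns a diagnosis into a Slack message body. Slack incoming webhooks accept a JSON body with a `text` field; everything else here (the `diagnosis` fields, the sample values, and the `slack_payload` helper) is a hypothetical example, not Lumigo’s notification format.

```python
import json

# Hypothetical diagnosis produced by the AI; every field and value is illustrative.
diagnosis = {
    "issue": "Delivery orders are not being processed",
    "root_cause": "orders API returns 500: createdAt is written in the wrong format",
    "recent_change": {"author": "dana", "when": "2 hours ago", "commit": "a1b2c3d"},
    "suggested_fix": "Serialize createdAt as an ISO 8601 string before the database write",
}

def slack_payload(d: dict) -> str:
    """Format a diagnosis as a Slack incoming-webhook body using mrkdwn text."""
    change = d["recent_change"]
    text = "\n".join([
        f":rotating_light: *{d['issue']}*",
        f"*Root cause:* {d['root_cause']}",
        f"*Recent change:* {change['commit']} by {change['author']}, {change['when']}",
        f"*Suggested fix:* {d['suggested_fix']}",
    ])
    return json.dumps({"text": text})

body = slack_payload(diagnosis)
print(body)
```

Sending it would be a POST of `body` with `Content-Type: application/json` to a webhook URL (for example with `urllib.request`), which is left out here so the sketch runs offline.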

Improving Model Accuracy

Building an effective AI model requires continuous iteration and refinement. Here’s how Lumigo enhanced the accuracy of its AI:

Prompting Improvements: The process began with crafting prompts that asked the model to solve issues. Over time, the prompts were refined by assigning the model a specific role, explaining the data being sent, and phrasing requests as if addressing a human colleague. This approach improved the model’s reasoning.

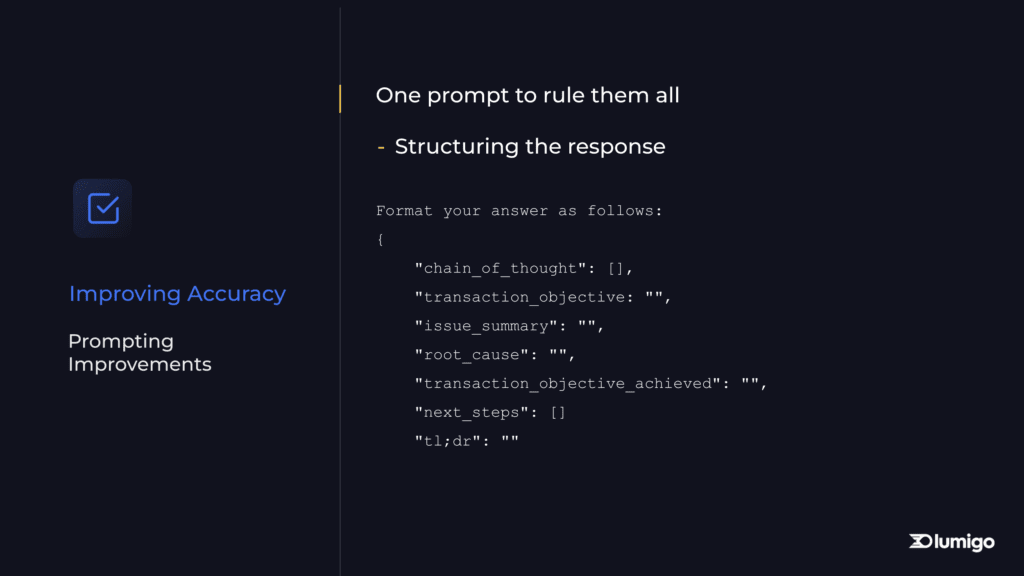
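The three prompting techniques described above (a role, an explanation of the data, and human-style phrasing) can be sketched as a small prompt builder. The role text, the `build_prompt` name, and the list-of-role/content-dicts message format (common to many chat APIs) are assumptions for illustration, not Lumigo’s actual prompts.

```python
import json

# Hypothetical prompt builder; the role text and field names are illustrative
# assumptions, not Lumigo's actual prompts.
def build_prompt(role: str, data_description: str, trace: dict, question: str) -> list[dict]:
    """Return a chat-style message list: a system role plus a user message."""
    system = (
        f"You are {role}. "                        # 1. give the model a specific role
        f"You will receive {data_description}. "   # 2. explain the data being sent
        "Explain your findings as you would to a colleague."  # 3. human framing
    )
    user = f"Here is the data:\n{json.dumps(trace, indent=2)}\n\n{question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

messages = build_prompt(
    role="a senior SRE who debugs serverless applications",
    data_description="a JSON trace of one failed request, including request and response payloads",
    trace={"function": "processOrder", "status": 500, "error": "ValidationError"},
    question="What is the most likely root cause of this failure?",
)
```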

Structured Responses: Lumigo had the model format its responses in a machine-readable structure, such as JSON. Clearly defining what the model needed to look for and how to present its findings significantly improved response accuracy.
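A minimal sketch of the structured-response idea: the prompt tells the model exactly which JSON keys to return, and the reply is parsed and checked before anything else uses it. The three keys in `REQUIRED_FIELDS` are a hypothetical schema chosen for illustration.

```python
import json

# Hypothetical response schema: the keys are assumptions for illustration.
REQUIRED_FIELDS = {"root_cause": str, "affected_resource": str, "suggested_fix": str}

# Instruction appended to the prompt so the model knows the exact output shape.
FORMAT_INSTRUCTIONS = (
    "Respond only with a JSON object containing the keys "
    + ", ".join(f'"{k}"' for k in REQUIRED_FIELDS)
    + "."
)

def parse_model_response(raw: str) -> dict:
    """Parse the model's JSON reply and check every required field is present and typed."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or invalid field: {field}")
    return data

reply = ('{"root_cause": "date stored as a number", "affected_resource": "orders-api", '
         '"suggested_fix": "convert dates to ISO strings before insert"}')
result = parse_model_response(reply)
```

Rejecting malformed replies early means a retry can be triggered instead of passing a half-formed answer to a developer.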

Chain-of-Thought Reasoning: Asking the model to outline its reasoning step by step, like a student showing their work on a math problem, not only improved response quality but also revealed how the model interpreted the data. This helped the team identify where data presentation could be improved.
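One way to apply chain-of-thought prompting while keeping output machine-readable is to ask for a list of reasoning steps before the conclusion, then keep those steps for review. The instruction wording, the `reasoning`/`root_cause` keys, and the sample reply below are all made up for illustration.

```python
import json

# Illustrative chain-of-thought instruction; wording and keys are assumptions.
COT_INSTRUCTIONS = (
    "Think step by step. First list each reasoning step you take, "
    "then give your conclusion. Respond as JSON: "
    '{"reasoning": ["step 1", "step 2", ...], "root_cause": "..."}'
)

def split_reasoning(raw: str) -> tuple[list[str], str]:
    """Separate the model's reasoning steps from its final answer."""
    data = json.loads(raw)
    steps = [str(s) for s in data.get("reasoning", [])]
    return steps, str(data["root_cause"])

# A made-up model reply, used only to exercise the parser.
reply = json.dumps({
    "reasoning": [
        "processOrder returned HTTP 500.",
        "Its write to the orders table was rejected.",
        "The payload's createdAt field is a number, but the table expects a string.",
    ],
    "root_cause": "processOrder writes createdAt in the wrong format",
})
steps, answer = split_reasoning(reply)
for i, step in enumerate(steps, 1):
    print(f"{i}. {step}")  # reviewing the steps shows how the model read the data
```

If a step misreads a field, that is a hint the data itself should be presented differently.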

Data Cleansing: Redundant data points and duplicates were removed to streamline large structures. This made the data compact and easier for the model to process effectively.
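A minimal sketch of this kind of cleanup: recursively drop empty values and fields treated as noise, then remove records that are identical after cleaning (for example, the same span reported twice). Which keys count as noise (`NOISE_KEYS`) is an assumption for illustration.

```python
import json

# Hypothetical set of fields treated as noise for root-cause analysis.
NOISE_KEYS = {"sdkVersion", "region", "memoryAllocated"}

def compact(value):
    """Recursively drop known-noise keys and empty values."""
    if isinstance(value, dict):
        cleaned = {k: compact(v) for k, v in value.items() if k not in NOISE_KEYS}
        return {k: v for k, v in cleaned.items() if v not in (None, "", [], {})}
    if isinstance(value, list):
        return [compact(v) for v in value]
    return value

def dedupe(records: list[dict]) -> list[dict]:
    """Compact each record and keep only the first copy of identical results."""
    seen, unique = set(), []
    for record in records:
        cleaned = compact(record)
        key = json.dumps(cleaned, sort_keys=True)  # canonical form for comparison
        if key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique

spans = [
    {"id": "a", "name": "processOrder", "error": None, "region": "us-east-1"},
    {"id": "a", "name": "processOrder", "error": None, "region": "us-east-1"},
    {"id": "b", "name": "ordersTable", "error": "ValidationException", "tags": []},
]
cleaned_spans = dedupe(spans)
```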

Human-Readable Traces: Lumigo’s trace structure was rewritten to be concise and clear. The traces were originally optimized for computers; restructuring them to be intuitive for humans made them easier for AI models to interpret as well. Representing resources, parent node IDs, and child node IDs in an easily interpretable format improved the model’s performance.
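To show what "easily interpretable" can mean, the sketch below turns flat span records linked by parent IDs into an indented outline, which both people and models can read at a glance. The field names (`id`, `parent_id`, `resource`) and sample spans are hypothetical, not Lumigo’s schema.

```python
from collections import defaultdict

# Hypothetical flat span records; field names are assumptions.
spans = [
    {"id": "1", "parent_id": None, "resource": "API Gateway POST /orders"},
    {"id": "2", "parent_id": "1", "resource": "Lambda processOrder"},
    {"id": "3", "parent_id": "2", "resource": "DynamoDB orders (ValidationException)"},
    {"id": "4", "parent_id": "2", "resource": "SQS notify-queue"},
]

def render_tree(spans: list[dict]) -> str:
    """Render parent/child span relationships as an indented outline."""
    by_id = {s["id"]: s for s in spans}
    children = defaultdict(list)
    roots = []
    for s in spans:
        if s["parent_id"] in by_id:
            children[s["parent_id"]].append(s)
        else:
            roots.append(s)  # no known parent: treat as a root
    lines = []

    def walk(span: dict, depth: int) -> None:
        lines.append("  " * depth + "- " + span["resource"])
        for child in children[span["id"]]:
            walk(child, depth + 1)

    for root in roots:
        walk(root, 0)
    return "\n".join(lines)

print(render_tree(spans))
```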

Agent-Based Expertise: When further improvements were needed, Lumigo implemented specialized AI agents for different types of issues. These agents became domain experts, balancing specificity with the flexibility to handle generic problems. By structuring input in concise, logical chunks, the agents processed information with greater accuracy.
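A minimal sketch of routing issues to specialized agents with a generic fallback. Each "agent" is stubbed as a plain function; in practice each would wrap a model call with its own prompt and only the data chunks relevant to it. The issue categories and agent names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Issue:
    kind: str     # e.g. "timeout" or "db_error"; the categories are hypothetical
    summary: str

# Stub agents: each would normally hold a specialized prompt and toolset.
def timeout_agent(issue: Issue) -> str:
    return f"[timeout expert] check downstream latency for: {issue.summary}"

def database_agent(issue: Issue) -> str:
    return f"[database expert] inspect query and schema for: {issue.summary}"

def generic_agent(issue: Issue) -> str:
    return f"[generalist] review trace and logs for: {issue.summary}"

AGENTS: dict[str, Callable[[Issue], str]] = {
    "timeout": timeout_agent,
    "db_error": database_agent,
}

def route(issue: Issue) -> str:
    """Send the issue to a specialist if one exists, otherwise to the generic agent."""
    agent = AGENTS.get(issue.kind, generic_agent)
    return agent(issue)

print(route(Issue("db_error", "orders insert failed")))
```

The fallback is what preserves flexibility: an unrecognized issue type still gets handled, just without specialist context.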

Building the Future with AI Agents

Developing AI for observability isn’t without challenges. Lumigo’s approach has involved:

Iterative Model Improvements: Enhancing prompt clarity, structuring responses for better comprehension, and leveraging “chain-of-thought” reasoning to improve accuracy.

Data Optimization: Cleaning and streamlining data structures to ensure models operate efficiently and effectively.

Agent-Based Expertise: Implementing specialized AI agents tailored for different types of issues. These agents break down problems into logical steps, invoking specific tools and APIs to diagnose and resolve issues dynamically.

For example, if an issue arises in a Lambda function, the AI agent might:

- Analyze the overall service graph to identify the problematic function.

- Retrieve and interpret relevant logs.

- Trace upstream dependencies to pinpoint the root cause.

- Suggest actionable fixes, stopping once the issue is resolved.
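The steps above can be sketched as a small tool-calling loop: the agent repeatedly picks a tool, updates its working state, and stops once a fix has been proposed. Here `choose_next_tool` is a deterministic stand-in for the model’s decision, and the service graph, errors, logs, and tool names are all hypothetical toy data rather than Lumigo’s implementation.

```python
from typing import Callable

# Toy stand-ins for a service graph, error reports, and a log store (hypothetical).
SERVICE_GRAPH = {"api": ["processOrder"], "processOrder": ["ordersTable"], "ordersTable": []}
ERRORS = {
    "processOrder": "HTTP 500 after ordersTable write",
    "ordersTable": "ValidationException: createdAt must be a string",
}
LOGS = {"processOrder": ["saving order 42", "ERROR write failed"]}

def analyze_service_graph(state: dict) -> None:
    """Record the first node in the graph that reports an error."""
    state["suspect"] = next(node for node in SERVICE_GRAPH if node in ERRORS)

def retrieve_logs(state: dict) -> None:
    state["logs"] = LOGS.get(state["suspect"], [])

def trace_dependencies(state: dict) -> None:
    """Follow failing dependencies from the suspect to the deepest failing node."""
    node, seen = state["suspect"], {state["suspect"]}
    while True:
        failing = [d for d in SERVICE_GRAPH.get(node, []) if d in ERRORS and d not in seen]
        if not failing:
            break
        node = failing[0]
        seen.add(node)
    state["root_cause"] = node

def suggest_fix(state: dict) -> None:
    rc = state["root_cause"]
    state["fix"] = f"Fix {rc}: {ERRORS[rc]}"

TOOLS: dict[str, Callable[[dict], None]] = {
    "analyze_service_graph": analyze_service_graph,
    "retrieve_logs": retrieve_logs,
    "trace_dependencies": trace_dependencies,
    "suggest_fix": suggest_fix,
}

def choose_next_tool(state: dict) -> str:
    """Deterministic stand-in for the model deciding which tool to call next."""
    if "suspect" not in state:
        return "analyze_service_graph"
    if "logs" not in state:
        return "retrieve_logs"
    if "root_cause" not in state:
        return "trace_dependencies"
    return "suggest_fix"

def run_agent(max_steps: int = 10) -> dict:
    state: dict = {"calls": []}
    for _ in range(max_steps):  # hard cap so a confused agent cannot loop forever
        tool = choose_next_tool(state)
        TOOLS[tool](state)
        state["calls"].append(tool)
        if "fix" in state:  # stop as soon as a fix has been proposed
            break
    return state

result = run_agent()
print(result["calls"])
print(result["fix"])
```

In a real agent, `choose_next_tool` would be replaced by a model that supports tool use, which is what lets the sequence of steps adapt to each issue instead of following a fixed script.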

This modular, dynamic approach allows Lumigo’s AI to address a broad spectrum of issues efficiently.

Watch Our On-Demand Webinar with AWS

We’ve only scratched the surface, and there’s much more to discuss. In October, we explored these concepts in depth with AWS Data Scientist Shreyas Subramanian. Watch the discussion on demand.

What’s Next?

Lumigo’s AI is powered by cutting-edge technologies like Amazon Bedrock, enabling it to orchestrate multistep tasks securely and intelligently. By leveraging advanced models and agents, Lumigo continues to push the boundaries of what’s possible in observability.

As we refine these capabilities, our mission remains clear: to reduce MTTR and democratize troubleshooting for developers everywhere. With tools like Lumigo Copilot, we’re not just imagining the future of observability—we’re building it. Learn more about Lumigo Copilot Beta.