kubectl restart pod: 4 Ways to Restart Your Kubernetes Pods

What Is kubectl restart pod?

When managing Kubernetes resources, the kubectl command-line tool is useful for quickly obtaining information and making modifications. kubectl restart pod is not a built-in command in kubectl. However, you can achieve the same result in several ways, for example, by creating a new pod with the same configuration and deleting the original pod.

Thus, while there is no single kubectl restart pod command, you can combine commands such as kubectl delete pod <pod-name> and kubectl create -f <pod-config-file.yaml> to replace a pod with an identical one.
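As a minimal sketch of that combination, the shell function below deletes a pod and then recreates it from its manifest. The function name recreate_pod, the KUBECTL override variable, and the example pod and file names are illustrative assumptions, not part of kubectl itself.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Delete a standalone pod, then recreate it from its manifest.
# $1 = pod name, $2 = path to the pod's YAML manifest (both hypothetical).
recreate_pod() {
  # Delete first: creating a pod whose name already exists would be rejected.
  "$KUBECTL" delete pod "$1" || return 1
  "$KUBECTL" create -f "$2"
}

# Example: recreate_pod my-pod my-pod.yaml
```

This approach suits a pod that was created directly from a manifest. The application is unavailable between the delete and the create.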

This is part of a series of articles about Kubernetes troubleshooting.

Understanding the Pod Lifecycle

In Kubernetes, a pod is the smallest deployable unit that represents a single instance of a containerized application. Understanding the lifecycle of a pod is crucial for managing and troubleshooting your applications running on Kubernetes. The lifecycle of a pod can be divided into five stages:

- Pending: When a pod is created, it enters the pending state, which means that the Kubernetes scheduler is looking for a suitable node to deploy the pod. During this stage, the container image is being pulled from the container registry and any volumes attached to the pod are being mounted. Once all of the dependencies are met, the pod moves to the running state.

- Running: When a pod is scheduled to a node, it moves to the running state, which means that the container(s) in the pod are started and running. The pod will remain in this state until it is terminated.

- Succeeded: A pod enters the succeeded state when all the containers in the pod have completed their execution and exited successfully. This state is used for batch jobs or one-time jobs that don’t require continuous running.

- Failed: If all of the containers in the pod have terminated, and at least one of them exited with a non-zero exit code or was terminated by the system and will not be restarted, the pod enters the failed state. This state indicates that the pod has failed to complete its intended task.

- Unknown: In some cases, Kubernetes may not be able to determine the status of a pod. For example, if the container runtime is unavailable or there is a communication problem with the Kubernetes API server, the pod may enter the unknown state.

It’s important to note that a container crash does not necessarily move the pod to the failed state. If a container in a running pod crashes and the pod’s restartPolicy allows it, the kubelet restarts the container in place. The pod typically stays in the running state while the container’s restart count increases.
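To see which state a pod is in and how often its containers have been restarted, you can query the pod's status fields with a JSONPath expression. The following is a minimal sketch. The function names, the KUBECTL override variable, and the example pod name are assumptions made for illustration.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Print the pod's phase: Pending, Running, Succeeded, Failed, or Unknown.
# $1 = pod name, $2 = namespace (defaults to "default").
pod_phase() {
  "$KUBECTL" get pod "$1" --namespace "${2:-default}" -o jsonpath='{.status.phase}'
}

# Print the restart count of each container in the pod.
pod_restart_counts() {
  "$KUBECTL" get pod "$1" --namespace "${2:-default}" -o jsonpath='{.status.containerStatuses[*].restartCount}'
}

# Example: pod_phase my-pod && pod_restart_counts my-pod
```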

Why You Might Want to Restart a Pod

It is not recommended to restart pods unless strictly necessary. However, there are several reasons why you might need to restart a pod in Kubernetes. For example:

- To apply configuration changes: If you make changes to the pod’s configuration, such as updating environment variables, volume mounts, or image version, you need to restart the pod to apply these changes.

- To recover from failures: If a container in the pod crashes or becomes unresponsive, restarting the pod may be necessary to recover the application and restore its functionality.

- To clear resource constraints: If a container in the pod is consuming too much memory or CPU, restarting the pod may free up resources and improve the application’s performance.
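Before restarting, it can help to confirm that a restart is actually needed. The sketch below gathers a pod's events and the logs of its previously terminated container. The function name inspect_pod and the KUBECTL override variable are assumptions. The logs command returns an error if the pod has no previous container instance.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Show a pod's state and recent events, then the logs of its last terminated container.
# $1 = pod name, $2 = namespace (defaults to "default").
inspect_pod() {
  "$KUBECTL" describe pod "$1" --namespace "${2:-default}"
  # --previous reads logs from the container instance that ran before the latest restart.
  "$KUBECTL" logs "$1" --namespace "${2:-default}" --previous
}

# Example: inspect_pod my-pod
```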

4 Ways to Restart Kubernetes Pods Using kubectl

Kubernetes does not have an equivalent to the docker restart command, which restarts a running Docker container. Instead, you restart a pod by having Kubernetes terminate it and create a replacement. Here are four common methods to restart a pod using kubectl commands.

1. Deleting the Pod

This is the simplest way to restart a pod. Kubernetes works in a declarative manner: a controller such as a Deployment, ReplicaSet, or StatefulSet declares how many pods should exist. When you delete one of those pods, the controller detects that the actual state no longer matches the declared state and creates a new pod to replace it. You can delete the pod using the kubectl delete pod <pod-name> command, and Kubernetes will automatically create a new pod with the same configuration. Note that a standalone pod that is not managed by a controller is not recreated after deletion.
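A minimal sketch of this method deletes one pod and then lists the pods that share its label, so that the replacement becomes visible. The function name, the KUBECTL override variable, and the example pod name and label are assumptions for illustration.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Delete a pod, then list pods with the same label to see its replacement.
# $1 = pod name, $2 = label selector (e.g. app=web), $3 = namespace (defaults to "default").
restart_pod_by_delete() {
  "$KUBECTL" delete pod "$1" --namespace "${3:-default}" || return 1
  "$KUBECTL" get pods --selector "$2" --namespace "${3:-default}"
}

# Example: restart_pod_by_delete web-5d9c7 app=web
```

The replacement pod gets a new name and may still be starting when it is listed.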

2. Scaling Replicas

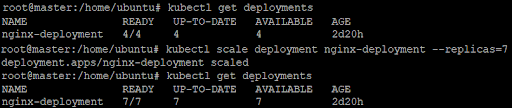

You can use the kubectl scale command to update the number of replicas. If you set the number of replicas to 0, Kubernetes will terminate the existing replica pods. When you increase the number of replicas, Kubernetes will create new pods. Note that the new replicas will not have the same names as the old ones. Here’s an example command to scale a deployment:

kubectl scale deployment <deployment-name> --replicas=<new-replica-count>

The following screenshot displays the current and updated number of replicas after the above command is executed:

This will update the specified deployment to have the new number of replicas, and Kubernetes will create or terminate pods to match. Note that scaling to 0 and back up causes downtime for your application until the new pods are running.
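The scale-down and scale-up steps can be combined into one helper that remembers the original replica count. This is a minimal sketch. The function name bounce_deployment and the KUBECTL override variable are assumptions, and the deployment name in the example is a placeholder.

```shell
# KUBECTL is an assumed override hook; point it at another command to preview or test.
KUBECTL="${KUBECTL:-kubectl}"

# Scale a deployment to zero, then back to its previous replica count.
# $1 = deployment name, $2 = namespace (defaults to "default").
bounce_deployment() {
  # Read the replica count the deployment currently requests.
  replicas="$("$KUBECTL" get deployment "$1" --namespace "${2:-default}" -o jsonpath='{.spec.replicas}')" || return 1
  # Terminate all existing pods.
  "$KUBECTL" scale deployment "$1" --namespace "${2:-default}" --replicas=0 || return 1
  # Restore the original count so that fresh pods are created.
  "$KUBECTL" scale deployment "$1" --namespace "${2:-default}" --replicas="$replicas"
}

# Example: bounce_deployment my-deployment
```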

3. Updating a Pod’s Environment Variables

Another approach to restarting pods is to update the environment variables in a deployment’s pod template. The environment variables of a running pod cannot be changed in place, so the change triggers a rollout that replaces the existing pods with new ones.

If you need to update environment variables in your pod configuration, you can use the kubectl set env command. Here is an example command to update an environment variable:

kubectl set env deployment/<deployment-name> <env-name>=<new-value>

For example, setting the environment variable for a storage directory to /local in this way updates all pods for the given deployment.

The command updates the specified environment variable in the deployment’s pod template and causes the pods to be replaced automatically.
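As a minimal sketch, the helper below sets an environment variable on a deployment and then waits for the resulting rollout to finish. The function name, the KUBECTL override variable, and the variable name STORAGE_DIR in the example are assumptions. The original example does not state the actual variable name.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Set an environment variable on a deployment and wait for its pods to be replaced.
# $1 = deployment name, $2 = KEY=VALUE pair, $3 = namespace (defaults to "default").
set_env_and_wait() {
  "$KUBECTL" set env "deployment/$1" "$2" --namespace "${3:-default}" || return 1
  # Block until the rollout triggered by the change completes or fails.
  "$KUBECTL" rollout status "deployment/$1" --namespace "${3:-default}"
}

# Example (STORAGE_DIR is a hypothetical variable name):
#   set_env_and_wait my-deployment STORAGE_DIR=/local
```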

4. Using Rollout Restart

You can use the kubectl rollout restart command, available in kubectl 1.15 and later, to trigger a rolling restart of a deployment. Kubernetes gradually replaces the existing pods with new pods created from the deployment’s current pod template until all the pods are new. For example:

kubectl rollout restart deployment <deployment-name>

This will trigger a rolling restart of the specified deployment. Kubernetes replaces the pods incrementally, according to the deployment’s rolling update strategy, which helps avoid downtime when the deployment runs more than one replica.
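A rolling restart is usually followed by a check that it completed. The sketch below pairs kubectl rollout restart with kubectl rollout status. The function name, the KUBECTL override variable, and the 120s default timeout are assumptions chosen for illustration.

```shell
# KUBECTL is an assumed override hook; set KUBECTL=echo to preview the commands.
KUBECTL="${KUBECTL:-kubectl}"

# Restart a deployment's pods and wait for the rollout to finish.
# $1 = deployment name, $2 = namespace (defaults to "default"),
# $3 = how long to wait before giving up (defaults to 120s, an arbitrary choice).
rollout_restart_and_wait() {
  "$KUBECTL" rollout restart "deployment/$1" --namespace "${2:-default}" || return 1
  "$KUBECTL" rollout status "deployment/$1" --namespace "${2:-default}" --timeout="${3:-120s}"
}

# Example: rollout_restart_and_wait my-deployment
```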

Kubernetes Troubleshooting with Lumigo

Lumigo is a troubleshooting platform, purpose-built for microservice-based applications. Developers using Kubernetes to orchestrate their containerized applications can use Lumigo to monitor, trace and troubleshoot issues fast. Deployed with zero-code changes and automated in one-click, Lumigo stitches together every interaction between micro and managed service into end-to-end stack traces. These traces, served alongside request payload data, give developers complete visibility into their container environments. Using Lumigo, developers get:

- End-to-end virtual stack traces across every micro and managed service that makes up a serverless application, in context

- API visibility that makes all the data passed between services available and accessible, making it possible to perform root cause analysis without digging through logs

- Distributed tracing that is deployed with no code and automated in one click

- Unified platform to explore and query across microservices, see a real-time view of applications, and optimize performance

To try Lumigo for Kubernetes, check out our Kubernetes operator on GitHub.
