May 02 2023

This post is part of an ongoing series about troubleshooting common issues with microservice-based applications. Read the previous one on intermittent failure.

Queues are an essential component of many applications, enabling asynchronous processing of tasks and messages. However, queues can become a bottleneck if they don’t drain fast enough, causing delays, increasing costs, and reducing the overall reliability of the system. This is a common issue faced by many serverless applications that rely on AWS Lambda functions and containers to process messages from AWS Simple Queue Service (SQS).

In this scenario, a large number of messages can accumulate in the queue, triggering CloudWatch alerts and indicating potential issues with the application. In this article, we will focus on how to address the problem of queues that don’t drain fast enough in the context of Lambda functions and containers that use SQS message queueing on AWS.

Let’s start by setting the scene. Suppose you have built an application on AWS Lambda that uses AWS Simple Queue Service (SQS) message queueing, and the queue is read by a Node.js container. The application is designed to process a large number of messages, but you begin to see a problem: the queue is not shrinking, or is even growing over time, and as a result AWS CloudWatch alerts are being triggered by the size of the queue.

Background information on Lambda & SQS

Well, let’s start by asking ourselves: what is AWS Lambda? It is a serverless, event-driven compute service offered by AWS that lets you run code for a myriad of applications and backend services without provisioning or managing servers. As with most serverless services, you only pay for the compute time you use while your code is running. Lambda functions have a default timeout of 3 seconds, which can be raised to a maximum of 15 minutes, so they are designed for running short, snappy code. Check out this Lumigo blog for more information and scenarios on AWS Lambda timeouts.

AWS SQS is a fully managed message queuing service that lets you decouple and scale microservices, distributed systems and serverless applications by sending messages asynchronously between their components. AWS SQS has two queue types: Standard and First-In-First-Out (FIFO). Standard queues are best for applications that do not require strict message ordering, while FIFO queues are best for applications that do.

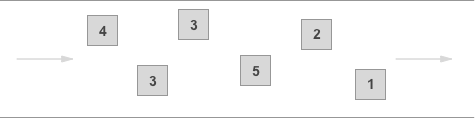

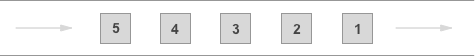

Standard queue:

First In First Out (FIFO) queue:

For a full breakdown of the two AWS SQS queue types, you can find the documentation here: https://aws.amazon.com/sqs/features/.
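The choice of queue type also shows up when you send messages. The sketch below builds the parameter object for an SQS `SendMessage` call (the same shape you would pass to `sqs.sendMessage(params)` in the AWS SDK). The helper name `buildSendMessageParams` is hypothetical, and it assumes the queue type can be inferred from the URL, since FIFO queue names must end in `.fifo`.

```javascript
// Hypothetical helper: builds SendMessage parameters for a Standard or FIFO queue.
// Assumption: a queue URL ending in ".fifo" identifies a FIFO queue.
function buildSendMessageParams(queueUrl, body, { groupId, dedupId } = {}) {
  const params = { QueueUrl: queueUrl, MessageBody: body };

  if (queueUrl.endsWith('.fifo')) {
    // FIFO queues guarantee ordering within a message group, so a group ID is mandatory
    if (!groupId) {
      throw new Error('FIFO queues require a MessageGroupId');
    }
    params.MessageGroupId = groupId;

    // Required unless content-based deduplication is enabled on the queue
    if (dedupId) {
      params.MessageDeduplicationId = dedupId;
    }
  }
  return params;
}

const standard = buildSendMessageParams(
  'https://sqs.us-east-1.amazonaws.com/123456789012/orders',
  JSON.stringify({ orderId: 1 })
);

const fifo = buildSendMessageParams(
  'https://sqs.us-east-1.amazonaws.com/123456789012/orders.fifo',
  JSON.stringify({ orderId: 1 }),
  { groupId: 'customer-42', dedupId: 'order-1' }
);

console.log(standard);
console.log(fifo);
```

Messages that share a `MessageGroupId` are delivered in order, so grouping by something like a customer ID keeps per-customer ordering while still letting different groups be processed in parallel.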

The Problem

Breaking down the problem, repeated failures in processing SQS messages can have a number of causes, such as incorrect message formats, invalid parameters being passed through, network timeouts or general bugs in the deployed code. When a Lambda function processes a batch of SQS messages and fails on any one of them, by default the entire batch becomes visible on the original queue again and is retried, including the messages that were processed successfully. You can change this by enabling partial batch responses (`ReportBatchItemFailures`) on the event source mapping, so that only the failed messages are retried. These repeated failures can then be flagged and picked up by monitoring tools such as AWS CloudWatch.
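A minimal sketch of a partial batch response follows, assuming the function is triggered by an SQS event source mapping with `FunctionResponseTypes` set to `["ReportBatchItemFailures"]`. The handler returns the IDs of the failed messages in `batchItemFailures`; Lambda deletes the rest, so one bad message no longer forces the whole batch to be retried. `processRecord` is a hypothetical stand-in for your business logic.

```javascript
// Hypothetical business logic: throws on malformed JSON or an invalid orderId
async function processRecord(record) {
  const payload = JSON.parse(record.body);
  if (typeof payload.orderId !== 'number') {
    throw new Error(`Invalid orderId in message ${record.messageId}`);
  }
  return payload;
}

// Handler for an SQS event source mapping with ReportBatchItemFailures enabled.
// Only messages listed in batchItemFailures return to the queue for retry.
const handler = async (event) => {
  const batchItemFailures = [];
  for (const record of event.Records) {
    try {
      await processRecord(record);
    } catch (err) {
      console.error(`Failed to process ${record.messageId}: ${err.message}`);
      batchItemFailures.push({ itemIdentifier: record.messageId });
    }
  }
  return { batchItemFailures };
};

// Local usage example: a hand-built event with two bad messages
const sampleEvent = {
  Records: [
    { messageId: 'm1', body: '{"orderId": 1}' },
    { messageId: 'm2', body: 'not json' },
    { messageId: 'm3', body: '{"orderId": "x"}' },
  ],
};

handler(sampleEvent).then((result) => console.log(JSON.stringify(result)));
```

In a deployed function you would export it with `exports.handler = handler`. Returning an empty `batchItemFailures` list tells Lambda the whole batch succeeded, while throwing from the handler still fails the entire batch.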

AWS CloudWatch is a monitoring and logging service that lets you collect and track metrics, collect and monitor log files, set alarms and automatically react to changes in your AWS resources. An alarm fires when a metric crosses a threshold you define. In this scenario, an alarm set to trigger when the queue size exceeds a certain number would switch to the alarm state in the CloudWatch console and, through an Amazon SNS topic, notify you by email, SMS or webhook.
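As a sketch of what such an alarm looks like, the hypothetical helper below builds the parameters for CloudWatch's `PutMetricAlarm` API. `AWS/SQS`, `ApproximateNumberOfMessagesVisible` and the `QueueName` dimension are the names SQS publishes to CloudWatch; the threshold, periods and SNS topic ARN are placeholder assumptions you would tune for your own queue.

```javascript
// Hypothetical helper: PutMetricAlarm parameters for an SQS queue-depth alarm
function buildQueueDepthAlarm(queueName, threshold, snsTopicArn) {
  return {
    AlarmName: `${queueName}-backlog`,
    Namespace: 'AWS/SQS',
    MetricName: 'ApproximateNumberOfMessagesVisible',
    Dimensions: [{ Name: 'QueueName', Value: queueName }],
    Statistic: 'Maximum',
    Period: 300,          // evaluate 5-minute windows
    EvaluationPeriods: 3, // fire after three consecutive breaching windows
    Threshold: threshold,
    ComparisonOperator: 'GreaterThanThreshold',
    TreatMissingData: 'notBreaching',
    AlarmActions: [snsTopicArn], // SNS fans out to email, SMS or HTTPS subscribers
  };
}

const alarm = buildQueueDepthAlarm(
  'YOUR-QUEUE',
  1000,
  'arn:aws:sns:us-east-1:123456789012:queue-alerts'
);
console.log(JSON.stringify(alarm, null, 2));

// With the v2 SDK you would then call:
// await new AWS.CloudWatch().putMetricAlarm(alarm).promise();
```

Requiring several consecutive breaching periods avoids paging someone for a short burst of traffic that the consumers clear on their own.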

Repeated failures in SQS message processing, with alarms firing as a result, can cause a number of issues for the application. They reduce its throughput, increase costs (every retry is another billed invocation) and undermine the overall reliability of the system. A large backlog also hurts the performance of downstream components. For example, the Node.js container reading from this queue could suffer further delays or timeouts when processing the data, especially if it is not receiving all of the messages in time.
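A simplified, hypothetical model makes the throughput point concrete. It assumes a fixed arrival rate and processing capacity per minute, and that failed messages simply return to the queue. Even when raw capacity exceeds the arrival rate, a steady failure rate can push the effective drain rate below it, and the backlog grows.

```javascript
// Hypothetical model of queue depth per minute. Failed messages return to the
// queue, so they use processing capacity without reducing the backlog.
function projectBacklog({ initial, arrivalsPerMin, processedPerMin, failureRate, minutes }) {
  const depths = [initial];
  let depth = initial;
  for (let t = 0; t < minutes; t++) {
    // Consumers can only attempt what is available, up to their capacity
    const attempted = Math.min(depth + arrivalsPerMin, processedPerMin);
    const succeeded = Math.round(attempted * (1 - failureRate));
    depth = depth + arrivalsPerMin - succeeded;
    depths.push(depth);
  }
  return depths;
}

// 100 messages/min arrive, consumers can handle 120/min
const healthy = projectBacklog({ initial: 0, arrivalsPerMin: 100, processedPerMin: 120, failureRate: 0, minutes: 3 });
const failing = projectBacklog({ initial: 0, arrivalsPerMin: 100, processedPerMin: 120, failureRate: 0.25, minutes: 3 });

console.log(healthy); // [ 0, 0, 0, 0 ]
console.log(failing); // [ 0, 25, 35, 45 ]
```

With a 25% failure rate, the 120 messages/min of capacity only removes 90 messages/min, so the queue grows by about 10 messages every minute, which is exactly the kind of steady climb a queue-depth alarm catches.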

How Lumigo Can Help

Well, with Lumigo, you are in the right place to find out how to debug and solve this problem. Lumigo is a cloud-native observability platform that helps you visualise and debug your applications at scale. In this scenario, Lumigo can help you in a number of ways:

- Distributed tracing – One of Lumigo’s key features is its distributed tracing. It lets you follow the path of a message through the system, from the AWS SQS queue into the Node.js container and beyond. This helps you identify how the errors are occurring and replicate them in a controlled environment.

- Debug code errors – With the detailed error analysis that Lumigo provides, you can see the call stack, the exact location of the error in the system and how it is being thrown. This reduces the manual work of adding logging and debugging the code, saving time for you and the other developers on your team.

- Monitor key metrics you set – Getting into the nitty-gritty of your system, Lumigo can also monitor key metrics within your infrastructure, such as invocation counts, execution times and message throughput. Seeing all of this in one place gives you a holistic view of how your system is working from the inside out.

- Analyse the logs – In Lumigo you can aggregate logs from Lambda functions and other AWS services, allowing you to quickly identify bottlenecks and failure points. In this scenario, that means viewing the logs from both the Lambda function and the Node.js container to see where the errors are being thrown.

Overall, Lumigo has a number of features that help with this scenario, so let’s look at how to add Lumigo to some code.

We can use the following code in a Node.js Lambda Function to use Lumigo and gain an insight into what is happening with an AWS SQS service.

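```javascript
// AWS SDK (v2) import. The Node.js 16 and earlier Lambda runtimes bundle it;
// on Node.js 18+ runtimes, package aws-sdk with your function.
const AWS = require('aws-sdk');

// Lumigo tracer import; the token is read from an environment variable
const lumigo = require('@lumigo/tracer')({ token: process.env.LUMIGO_TRACER_TOKEN });

// Handler function (async, so the callback parameter is unused)
const myHandler = async (event, context, callback) => {
  const sqs = new AWS.SQS();
  const queueUrl = 'https://sqs.us-east-1.amazonaws.com/123456789012/YOUR-QUEUE';

  // Read up to 10 messages (the SQS maximum per call) from the queue
  const data = await sqs.receiveMessage({
    QueueUrl: queueUrl,
    MaxNumberOfMessages: 10,
    VisibilityTimeout: 20, // seconds the messages stay hidden from other consumers
    WaitTimeSeconds: 0,    // short polling: return immediately, even if empty
  }).promise();

  // Messages is absent when the queue is empty, and DeleteMessageBatch
  // rejects an empty Entries list, so stop early
  const messages = data.Messages || [];
  if (messages.length === 0) {
    return;
  }

  // Process the messages
  for (const message of messages) {
    console.log(message.Body);
  }

  // Delete the processed messages from the queue
  const deleteParams = {
    QueueUrl: queueUrl,
    Entries: messages.map((message) => ({
      Id: message.MessageId,
      ReceiptHandle: message.ReceiptHandle,
    })),
  };

  await sqs.deleteMessageBatch(deleteParams).promise();
};

exports.handler = lumigo.trace(myHandler);
```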

Breaking Down The Code:

Step 1: Import the AWS SDK and the Lumigo tracer, and initialise the tracer with the Lumigo token read from an environment variable.

Step 2: Declare the Lambda handler function, which takes three parameters (`event`, `context` and `callback`) and is responsible for reading and processing the messages from the SQS queue. Because the handler is `async`, the `callback` parameter is never used.

Step 3: The handler creates an SQS client using the AWS SDK and calls `receiveMessage`, with parameters that set how many messages to fetch per call (up to 10), how long the received messages stay hidden from other consumers (`VisibilityTimeout`) and how long to wait for messages to arrive (`WaitTimeSeconds`). The CloudWatch alarms are configured separately on the queue’s metrics; in our original scenario, an alarm would trigger once the number of messages in the queue grows past a set threshold.

Step 4: For the purpose of this blog, the code only prints each message body, but this is where it would process the messages before deleting them from the queue. If processing fails, the handler can throw an error, or leave the message undeleted so that it becomes visible again once the visibility timeout expires and is retried, as discussed earlier in the blog.

Step 5: At the end it exports the handler function with Lumigo tracing enabled. This essentially wraps the original handler function and adds the tracing and observability capabilities that Lumigo provides.
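The handler above deletes every message it receives, which is fine while processing is just a `console.log`. Once real processing can fail, one option is to delete only the messages that succeeded. The sketch below uses a hypothetical helper, `buildDeleteEntries`, that takes the `Messages` array returned by `receiveMessage` and a synchronous processing function (make the helper `async` and `await` the call if your processing is asynchronous). Failed messages are simply left on the queue, where they reappear after the `VisibilityTimeout` and are retried.

```javascript
// Hypothetical helper: process each message individually and return
// DeleteMessageBatch entries only for the ones that succeeded.
function buildDeleteEntries(messages, processMessage) {
  const entries = [];
  // receiveMessage omits Messages entirely when the queue is empty
  for (const message of messages || []) {
    try {
      processMessage(message);
      entries.push({ Id: message.MessageId, ReceiptHandle: message.ReceiptHandle });
    } catch (err) {
      // Not deleted, so SQS redelivers it after the visibility timeout
      console.error(`Leaving ${message.MessageId} for retry: ${err.message}`);
    }
  }
  return entries;
}

// Usage with hand-built messages in the shape returned by receiveMessage
const messages = [
  { MessageId: 'a1', ReceiptHandle: 'rh-a1', Body: '{"ok":true}' },
  { MessageId: 'b2', ReceiptHandle: 'rh-b2', Body: 'oops' },
];
const entries = buildDeleteEntries(messages, (m) => JSON.parse(m.Body));
console.log(entries); // only a1 is scheduled for deletion

// In the handler, only call DeleteMessageBatch when there is something to delete:
// if (entries.length > 0) {
//   await sqs.deleteMessageBatch({ QueueUrl: queueUrl, Entries: entries }).promise();
// }
```

Pairing this with a queue-depth alarm means a persistent failure shows up as a growing backlog rather than as silently lost messages.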

Overall, this simple block of code demonstrates how to integrate Lumigo tracing into a Node.js Lambda function, giving you insight into the behaviour of the code and its message processing. If you want to give this a go yourself, the full documentation for the Lumigo Node.js tracer can be found here: https://github.com/lumigo-io/lumigo-node.

Don’t Let Queues Drain your App Usability

In this blog post, we’ve explored a common scenario in serverless computing where repeated failures in processing messages from an AWS SQS queue stop the queue from getting smaller, causing CloudWatch alerts to trigger. We have also looked at some of Lumigo’s features and how they give deep visibility into a serverless application, helping you troubleshoot and understand how and where the failures are happening. Alongside that, we walked through a code example showing how simple it is to get started with Lumigo tracing in your Lambda functions, so you can collect performance metrics and other data and quickly pinpoint issues when and if they arise.

Queues that don’t drain fast enough can be a problem not just for AWS Lambda functions, but also for containers and other serverless applications that rely on message queueing to process tasks and messages. As these applications continue to grow in popularity, it becomes increasingly important for developers to be aware of the challenges associated with message queueing and take proactive steps to mitigate potential issues. By implementing the right tools and approach, developers can help ensure that queues are efficient, reliable, and scalable, enabling them to build robust and resilient applications that meet the needs of their users.