Oct 16 2019

Serverless Hero Yan Cui explains when and why you should use AWS API Gateway service proxies, and introduces an open source tool that makes them easy to implement.

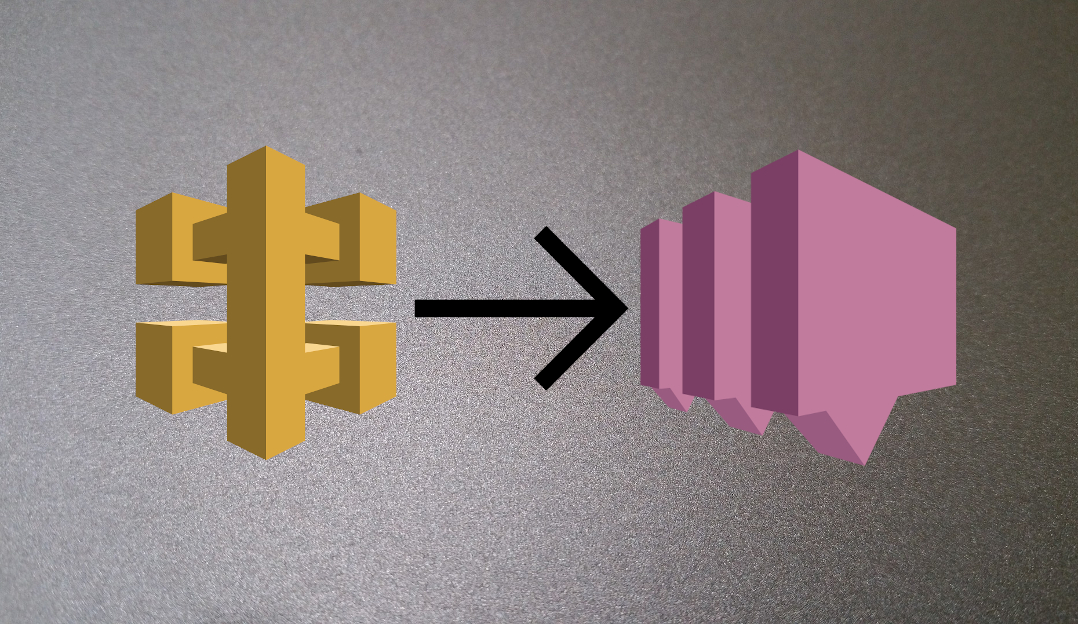

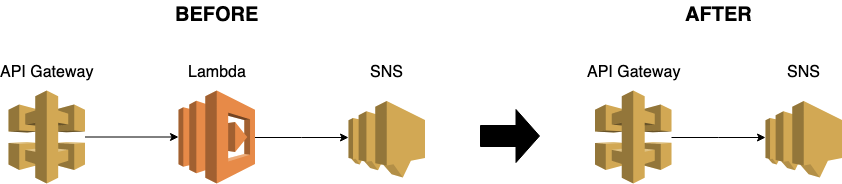

One of the most powerful yet often underutilized features of API Gateway is its ability to integrate directly with other AWS services. For example, you can connect API Gateway directly to an SNS topic without needing a Lambda function in the middle, or to S3, or to any number of other AWS services.

In this post, let’s talk about why it’s useful, when you should consider it and how to do it.

Why?

The main (and probably only) reason is to remove Lambda from the equation.

In the process, we also remove all the limitations and overhead that come with Lambda.

Cold starts

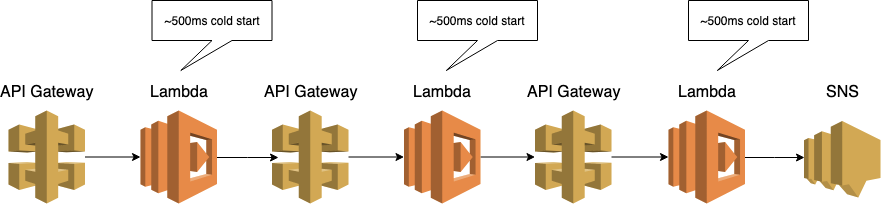

If you’re using Node.js, Python or Golang, you can reduce the cold start duration to ~500ms with a few optimizations. That should keep even cold starts within the acceptable latency range for most applications.

However, some applications have stricter latency requirements. Or the API might be part of a call chain that involves multiple Lambda functions, in which case the cold starts can stack up and become problematic as a whole, even if each one is fine individually.
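To put rough numbers on the stacking effect, here is a minimal Python sketch. It assumes every function in the chain has the same cold start probability `p` and that cold starts happen independently, which is a simplification; the 500ms figure is the optimized cold start mentioned above, and the 1% rate is purely hypothetical.

```python
from typing import Sequence


def worst_case_overhead_ms(cold_starts_ms: Sequence[float]) -> float:
    """Worst case: every function in a synchronous chain is cold, so the delays add up."""
    return sum(cold_starts_ms)


def p_at_least_one_cold_start(p: float, n: int) -> float:
    """Chance that a request hits at least one cold start across n independent hops."""
    return 1 - (1 - p) ** n


if __name__ == "__main__":
    chain = [500, 500, 500]  # three functions, ~500ms cold start each (assumed)
    print(worst_case_overhead_ms(chain))                  # 1500
    print(round(p_at_least_one_cold_start(0.01, 3), 4))   # 0.0297
```

Under these assumptions, a 1% cold start rate per function becomes roughly a 3% chance per request across three hops, and in the worst case the caller waits an extra 1.5 seconds.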

Cost

Having a Lambda function in the mix means you also pay for the Lambda invocations, plus associated costs such as CloudWatch Logs.

While this should not be the primary reason to use API Gateway service proxies, it’s worth considering. That said, Lambda is usually a small part of the overall cost. API Gateway, for instance, is usually more expensive than Lambda, and in production even CloudWatch Logs costs often eclipse Lambda costs.
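As a back-of-the-envelope comparison, here is a Python sketch for one million requests. The prices are assumptions based on us-east-1 list prices around the time of writing (API Gateway REST APIs at $3.50 per million requests; Lambda at $0.20 per million requests plus $0.0000166667 per GB-second; CloudWatch Logs ingestion at $0.50 per GB). It ignores the free tier and volume discounts, so check the current pricing pages before relying on it.

```python
# Assumed us-east-1 list prices (late 2019); verify against current AWS pricing.
APIGW_PER_MILLION = 3.50
LAMBDA_PER_MILLION = 0.20
LAMBDA_PER_GB_SECOND = 0.0000166667
CWL_INGEST_PER_GB = 0.50


def apigw_cost(requests: int) -> float:
    """API Gateway REST API request charges."""
    return requests / 1_000_000 * APIGW_PER_MILLION


def lambda_cost(requests: int, memory_mb: int, billed_ms: int) -> float:
    """Lambda request charges plus duration, billed in GB-seconds."""
    gb_seconds = (memory_mb / 1024) * (billed_ms / 1000) * requests
    return requests / 1_000_000 * LAMBDA_PER_MILLION + gb_seconds * LAMBDA_PER_GB_SECOND


def logs_cost(requests: int, bytes_per_request: int) -> float:
    """CloudWatch Logs ingestion charges for the given log volume per request."""
    return requests * bytes_per_request / 1024 ** 3 * CWL_INGEST_PER_GB


if __name__ == "__main__":
    n = 1_000_000
    print(f"API Gateway: ${apigw_cost(n):.2f}")                           # $3.50
    print(f"Lambda (128MB, 100ms): ${lambda_cost(n, 128, 100):.2f}")      # $0.41
    print(f"CloudWatch Logs (1KB/request): ${logs_cost(n, 1024):.2f}")    # $0.48
```

With these assumptions, API Gateway costs more than eight times as much as the Lambda invocations, and even a modest 1KB of logs per request costs more than the function itself.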

Limits

Lambda also has two concurrency limits. The first is the total number of concurrent executions across ALL functions in the region. This is a soft limit, so you can raise it with a simple support ticket.

However, there is also a hard limit on how quickly you can increase the number of concurrent executions after the initial burst limit: 500 per minute. This means it can take up to 20 minutes to reach a peak concurrency of 10k.

For APIs that need to handle large bursts of traffic, this is a showstopper. Because these limits are regional, one spiky API can also have a large impact on everything else in the region: when it consumes all the available concurrency, other function invocations will be throttled.
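The ramp-up arithmetic is easy to sketch in Python. The initial burst values below are assumptions: at the time of writing, AWS documented burst limits between 500 and 3,000 depending on the region, after which concurrency could grow by 500 per minute.

```python
import math


def minutes_to_reach(target: int, initial_burst: int, ramp_per_minute: int = 500) -> int:
    """Minutes of scaling needed after the initial burst to reach `target` concurrency."""
    if target <= initial_burst:
        return 0  # the initial burst alone covers the target
    return math.ceil((target - initial_burst) / ramp_per_minute)


if __name__ == "__main__":
    for burst in (0, 500, 3000):
        print(burst, minutes_to_reach(10_000, burst))
```

With a burst of 500 to 3,000, reaching 10k concurrency takes 14 to 19 minutes, close to the 20-minute upper bound above.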

However, it’s worth remembering that even without Lambda, API Gateway has its own set of regional throughput limits. The default regional limit for API Gateway is 10k requests/s. This is also a soft limit and can be raised through a support ticket.

When?

You should consider this approach when:

- Your Lambda function does absolutely nothing other than calling another AWS service and returning the response.

- You are concerned about the cold start latency overhead, or hitting those pesky concurrency limits.
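For illustration, this is the kind of function the first point describes: a minimal Python sketch that takes the request body from API Gateway, sends it to SQS, and does nothing else. The SQS client is injected so the handler can be tested without AWS; in a real deployment you might pass `boto3.client("sqs")`, and `QUEUE_URL` is a hypothetical environment variable.

```python
import json
from typing import Any, Callable, Dict

Event = Dict[str, Any]


def make_handler(sqs_client: Any, queue_url: str) -> Callable[[Event, Any], Dict[str, Any]]:
    """Build a Lambda handler that only forwards the HTTP body to an SQS queue."""

    def handler(event: Event, context: Any) -> Dict[str, Any]:
        # With Lambda proxy integration, API Gateway passes the raw request body as a string.
        result = sqs_client.send_message(QueueUrl=queue_url, MessageBody=event["body"])
        # Hand the SQS response straight back; there is no other logic here.
        return {"statusCode": 200, "body": json.dumps({"MessageId": result["MessageId"]})}

    return handler


# Hypothetical wiring inside Lambda:
#   import os, boto3
#   handler = make_handler(boto3.client("sqs"), os.environ["QUEUE_URL"])
```

A function like this adds cold starts and counts against concurrency limits without contributing any logic of its own, which is exactly the case where a service proxy can take its place.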

Before you reach any hasty conclusions, keep in mind that your function could be fulfilling a number of non-functional requirements too. For example:

- By using the AWS SDK, the function is applying retries with exponential backoff on retriable errors.

- It might be logging useful contextual information about “why” you’re calling the AWS service in question. These could include correlation IDs and other information that can only be extracted from the request payload.

- It might be handling errors and writing useful log messages to help you debug the problem later.

- It might apply fallbacks when the AWS service fails. For example, if a DynamoDB read fails after the built-in retries then we can return a default value instead of a 500 HTTP response.

- It might be injecting failures into the requests to the AWS service as part of chaos engineering practices. This helps us identify weaknesses in our code and proactively build resilience against different failure modes, which brings us back to the point about error handling in our Lambda function.
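The AWS SDKs already implement retries with exponential backoff, so you would not normally write this yourself. Still, a minimal Python sketch helps show what you give up when you remove the function: retries with jittered exponential backoff on retriable errors, plus a fallback to a default value once retries are exhausted. `RetriableError` and the parameter names are hypothetical.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RetriableError(Exception):
    """Hypothetical marker for transient failures such as throttling."""


def call_with_retries(
    operation: Callable[[], T],
    *,
    max_attempts: int = 3,
    base_delay: float = 0.05,
    max_delay: float = 1.0,
    fallback: Optional[Callable[[], T]] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except RetriableError:
            if attempt == max_attempts:
                if fallback is None:
                    raise  # give up and surface the last error to the caller
                return fallback()  # degrade gracefully instead of returning a 500
            # Exponential backoff with "full jitter": wait a random time up to the capped delay.
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))
    raise AssertionError("unreachable")
```

Only retriable errors are retried or replaced by the fallback here; anything else propagates immediately, which is usually what you want for genuine bugs.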

How?

So, assuming you have weighed the trade-offs of having a Lambda function behind API Gateway and decided to remove it, how do you actually let API Gateway talk to another AWS service directly?

Let’s also assume that “configure it in the AWS console” is not a suitable solution here, because we want repeatable infrastructure defined as code.

I suspect one of the reasons why service proxies are not used more frequently is that they are just clumsy to implement:

- You have to navigate through a myriad of IAM permissions, which sometimes do not have a 1-to-1 mapping with the operation.

- You often have to write custom VTL templates, which is definitely not everybody’s cup of tea!

- It’s difficult to test them. The api-gateway-mapping-template project is a godsend but has not been updated for years. Most folks would write the template in the API Gateway console and test it there before copying the template code out.

- Depending on the service you want to connect to, there are different conventions you have to adhere to. These secret handshakes/incantations are badly documented and a test of one’s googling prowess.
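To make the IAM part concrete, here is a Python sketch that builds the two policy documents an SQS service proxy needs: a trust policy that lets API Gateway assume the integration role, and a permissions policy that allows only `sqs:SendMessage` on one queue. It illustrates least privilege and is not necessarily the exact policy any particular tool generates; the queue ARN is a placeholder.

```python
import json
from typing import Any, Dict


def apigw_trust_policy() -> Dict[str, Any]:
    """Trust policy allowing the API Gateway service to assume the integration role."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {"Service": "apigateway.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }],
    }


def sqs_send_policy(queue_arn: str) -> Dict[str, Any]:
    """Permissions policy scoped to sending messages to a single queue, and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Action": "sqs:SendMessage",
            "Resource": queue_arn,
        }],
    }


if __name__ == "__main__":
    # Placeholder ARN for illustration only.
    arn = "arn:aws:sqs:us-east-1:123456789012:my-queue"
    print(json.dumps(apigw_trust_policy(), indent=2))
    print(json.dumps(sqs_send_policy(arn), indent=2))
```

SQS happens to be a clean one-to-one case; as noted above, other services are not always so tidy.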

Fortunately, these are the kinds of problems that tooling can solve, and I have been working with Takahiro Horike and Erez Rokah on just the thing for you!

If you’re using the Serverless framework, then the serverless-apigateway-service-proxy plugin makes creating service proxies really easy. Right now, the plugin supports Kinesis Streams, SQS, SNS and S3. And we’re actively working on supporting DynamoDB as well.

To configure a service proxy for SQS, first install the plugin by running `serverless plugin install -n serverless-apigateway-service-proxy`.

In your serverless.yml, add (or append to an existing) custom section:

```yaml
custom:
  apiGatewayServiceProxies:
    - sqs:
        path: /sqs
        method: post
        queueName:
          'Fn::GetAtt': ['SQSQueue', 'QueueName']

# 'SQSQueue' is the logical ID of a queue defined in the same stack, for example:
resources:
  Resources:
    SQSQueue:
      Type: 'AWS::SQS::Queue'
```

And that’s it! Now you have an endpoint that forwards the POST body as a message to the designated SQS queue. The plugin ensures that API Gateway has permission to publish messages to that queue, and nothing else.
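Conceptually, the proxy has to turn the HTTP request into an SQS API call. One common way to do that with a direct SQS integration is a VTL request template along the lines of `Action=SendMessage&MessageBody=$util.urlEncode($input.body)`, which sends the body to SQS's query-style `SendMessage` action as a form-encoded request. The Python sketch below mimics that transformation; it illustrates the general technique under that assumption and is not a copy of the plugin's template.

```python
from urllib.parse import parse_qs, urlencode


def to_sqs_send_message(http_body: str) -> str:
    """Form-encode an HTTP body as an SQS query-API SendMessage request body."""
    return urlencode({"Action": "SendMessage", "MessageBody": http_body})


if __name__ == "__main__":
    payload = to_sqs_send_message('{"orderId": 42, "note": "a & b"}')
    print(payload)
    # Decoding recovers the original body unchanged.
    print(parse_qs(payload)["MessageBody"][0])
```

URL-encoding matters here: characters such as `&` in the body would otherwise be read as parameter separators. In a real integration, the request to SQS also needs the `application/x-www-form-urlencoded` content type.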

Optionally, you can also enable CORS and add authorization with AWS_IAM, Cognito user pools, or custom authorizer functions.

Where it makes sense, the plugin also lets you customize the request template. That is, if you’re not afraid to get your hands dirty with VTL!

We hope this plugin makes it easy for you to create API Gateway service proxies, and that you take advantage of this wonderful feature where appropriate. If you want us to add support for other services, please submit a feature request here.

P.S. We already support Step Functions through the serverless-step-functions plugin, another joint venture between Horike-san and me!