Sep 29 2021

AWS just announced support for [AWS Lambda](https://lumigo.io/learn/aws-lambda-cost-guide/) functions powered by AWS Graviton2 processors. These are 64-bit Arm-based processors that are custom-built by AWS and offer a better price-to-performance ratio.

In this post, let me take you through what we have learned about this new option and what it means for you.

How it works

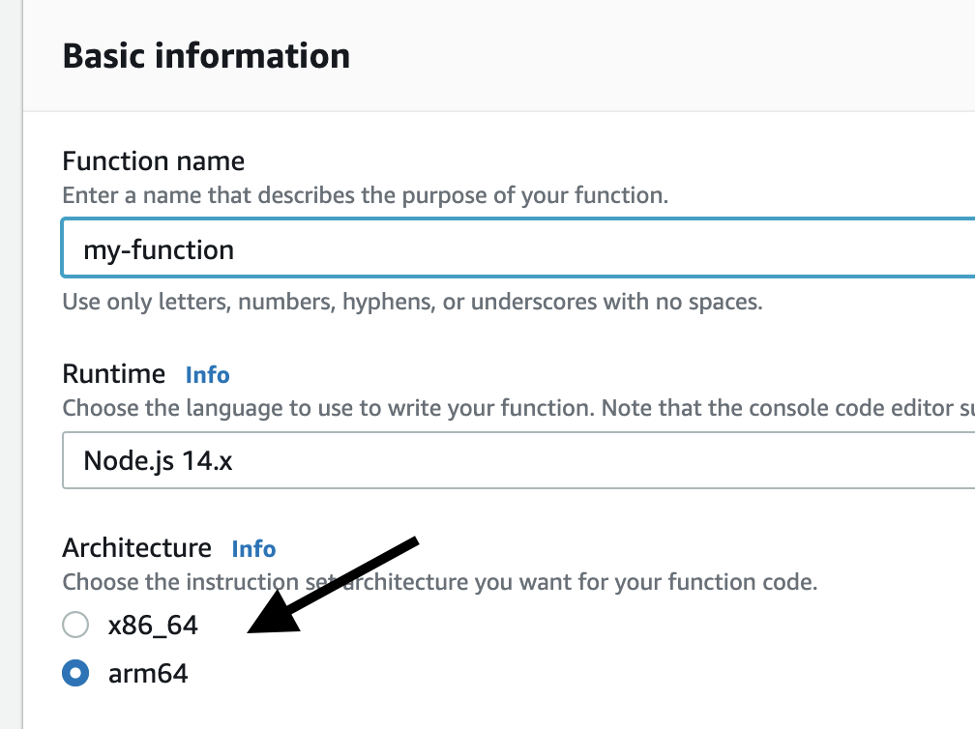

When you create a new Lambda function, you now have the option to select whether your code runs on x86_64 or arm64 architectures.
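If you create functions from code rather than the console, the same choice is exposed through the `Architectures` parameter of the Lambda `CreateFunction` API. Here is a minimal sketch that builds the arguments for boto3's `create_function` call. The helper name `build_create_function_args`, the runtime, the handler, and the values in the usage comment are illustrative assumptions, not something from the announcement.

```python
def build_create_function_args(name, role_arn, zip_bytes, architecture="arm64"):
    """Build keyword arguments for boto3's lambda client create_function().

    `architecture` must be one of the two values Lambda accepts.
    """
    if architecture not in ("x86_64", "arm64"):
        raise ValueError(f"unsupported architecture: {architecture}")
    return {
        "FunctionName": name,
        "Runtime": "python3.9",        # assumed runtime for this sketch
        "Role": role_arn,
        "Handler": "handler.main",     # hypothetical module.function
        "Code": {"ZipFile": zip_bytes},
        "Architectures": [architecture],
    }


# Usage (needs boto3 and AWS credentials, so it is left commented out):
# import boto3
# with open("function.zip", "rb") as f:  # hypothetical deployment package
#     args = build_create_function_args(
#         "my-function", "arn:aws:iam::123456789012:role/my-role", f.read()
#     )
# boto3.client("lambda").create_function(**args)
```

Keeping the architecture as a single argument makes it easy to deploy the same package to both architectures side by side when you want to compare them.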

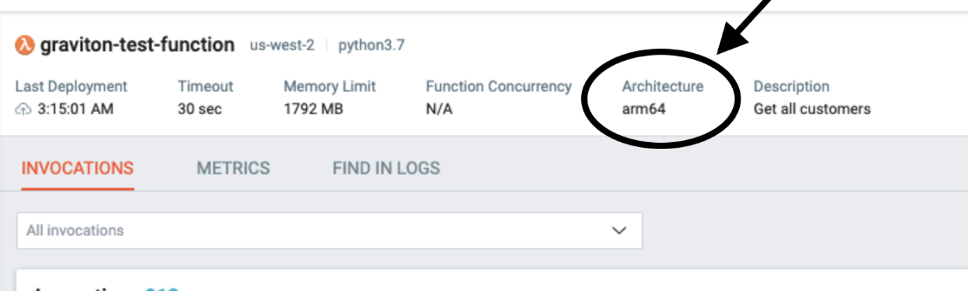

As an AWS launch partner for this new feature, I’m excited to tell you that Lumigo already supports Graviton2 Lambda functions! You can see what architecture a function runs on in the Lumigo console.

How does it compare to x86 functions?

With Lambda, CPU and network bandwidth are allocated in proportion to the amount of memory you configure for a function, and the Graviton2 processor is supposed to offer better performance at the same price point.

Graviton2-based Lambda functions are 20% cheaper than x86-based Lambda functions. According to AWS's official announcement post, Graviton2-based functions also performed better than x86-based functions on a variety of workloads and offer up to 34% better price-performance.

However, when it comes to performance, it’s essential to benchmark your workload directly. You will likely see very different results depending on the workloads you test. If your workload performs as well on Graviton2 processors as it does on x86 processors then hey, you just saved yourself 20% on cost! Even if it performs slightly worse on Graviton2 processors then it might still be worthwhile making the switch given the significant cost savings.
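To make "slightly worse" concrete, here is a rough sketch of the break-even point. It assumes the 20% discount applies to the duration charge and ignores any per-request fee. The function names are made up for illustration, and the durations are placeholders for your own benchmark results.

```python
ARM_DISCOUNT = 0.20  # from the post: Graviton2 functions are 20% cheaper


def relative_cost(arm_ms, x86_ms, discount=ARM_DISCOUNT):
    """Duration cost on arm64 as a fraction of the duration cost on x86.

    A value below 1.0 means the arm64 version is cheaper to run.
    """
    return (arm_ms / x86_ms) * (1 - discount)


def break_even_slowdown(discount=ARM_DISCOUNT):
    """How many times longer arm64 may run before it costs the same as x86."""
    return 1 / (1 - discount)


# Placeholder benchmark numbers: arm64 is 10% slower than x86 here.
print(relative_cost(arm_ms=110, x86_ms=100))
print(break_even_slowdown())
```

Under these assumptions a function can take up to 25% longer on Graviton2 before the saving disappears, since 1 / 0.8 = 1.25.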

What does this mean for you?

Graviton2 is a good fit for a wide range of different workloads and lets you make smart trade-offs for different situations.

For example, a Lambda function that handles API requests and calls DynamoDB or other AWS services is well suited for Graviton2. These IO-heavy functions typically under-utilize their CPU resources and running them on the Graviton2 processor gives you a better price without sacrificing user experience.

Similarly, a Lambda function that trains machine learning (ML) models can run with thousands of concurrent executions to improve throughput. A 10 GB Lambda function gives you 6 vCPUs per concurrent execution, so 3,000 concurrent executions equate to a whopping 18,000 vCPUs. This is akin to having a personal supercomputer! This workload is CPU-intensive but not time-sensitive, and given the huge number of vCPUs involved, a better price-to-performance ratio can make a big difference to the cost of running it.
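As a back-of-the-envelope sketch of what that difference looks like, the snippet below prices one hour of 3,000 concurrent 10 GB executions. The x86 price per GB-second is an assumed list price used only for illustration (check the Lambda pricing page for your region), the Graviton2 price is derived from the 20% figure above, and the training job is assumed to take the same time on both architectures.

```python
X86_PRICE_PER_GB_SECOND = 0.0000166667  # assumption: illustrative list price
ARM_DISCOUNT = 0.20                      # from the post: 20% cheaper


def fleet_cost(concurrency, memory_gb, seconds, price_per_gb_second):
    """Duration cost of `concurrency` executions running for `seconds` each."""
    return concurrency * memory_gb * seconds * price_per_gb_second


x86 = fleet_cost(3000, 10, 3600, X86_PRICE_PER_GB_SECOND)
arm = x86 * (1 - ARM_DISCOUNT)  # same duration assumed on both architectures
print(f"x86: ${x86:,.2f}  arm64: ${arm:,.2f}  saved: ${x86 - arm:,.2f}")
```

With the assumed price, that is roughly $1,800 per hour on x86 versus roughly $1,440 on Graviton2, before accounting for any difference in how long the job takes.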

On the other hand, if you encode customer-uploaded videos with Lambda, then the workload is both CPU-intensive and time-sensitive (the customer is waiting for a response). To get the best performance possible, you might go with x86 even if it's less cost-effective.

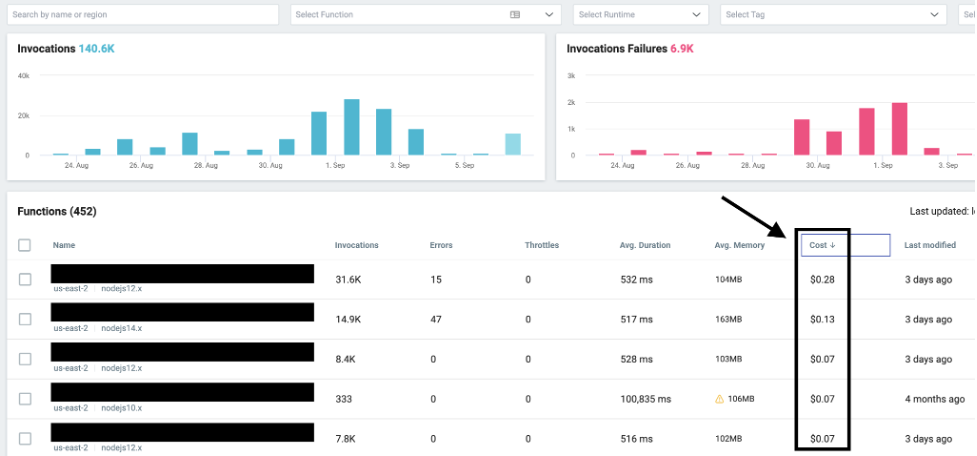

The best thing about this new feature is that you can decide which architecture to use function by function. And since Lambda bills each function for its invocations and how long they run, you can be smart about exactly when and where to optimize. Why waste your time optimizing a function if it runs twice a month and costs you fractions of a penny?
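One way to decide where to start is to rank functions by how much a switch could save each month. The sketch below does that for a few made-up functions. The usage numbers, the `FunctionUsage` type, and the x86 price per GB-second are all assumptions for illustration, and the saving is an upper bound that assumes each function runs just as fast on Graviton2.

```python
from dataclasses import dataclass

X86_PRICE_PER_GB_SECOND = 0.0000166667  # assumption: illustrative list price
ARM_DISCOUNT = 0.20                      # from the post: 20% cheaper


@dataclass
class FunctionUsage:
    name: str
    invocations: int         # invocations per month
    avg_duration_ms: float   # average billed duration
    memory_mb: int           # configured memory

    def monthly_duration_cost(self, price=X86_PRICE_PER_GB_SECOND):
        gb_seconds = (
            self.invocations
            * (self.avg_duration_ms / 1000)
            * (self.memory_mb / 1024)
        )
        return gb_seconds * price


def rank_by_potential_saving(functions, discount=ARM_DISCOUNT):
    """Return (name, estimated monthly saving) pairs, largest saving first."""
    savings = [(f.name, f.monthly_duration_cost() * discount) for f in functions]
    return sorted(savings, key=lambda pair: pair[1], reverse=True)


# Hypothetical usage data for three functions.
usage = [
    FunctionUsage("monthly-report", invocations=2, avg_duration_ms=30_000, memory_mb=512),
    FunctionUsage("api-handler", invocations=50_000_000, avg_duration_ms=80, memory_mb=1024),
    FunctionUsage("image-resize", invocations=1_000_000, avg_duration_ms=900, memory_mb=2048),
]

for name, saving in rank_by_potential_saving(usage):
    print(f"{name}: up to ${saving:,.2f} per month")
```

Functions at the bottom of the list, like the twice-a-month report here, are the ones you can safely leave alone.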

Lumigo takes the guesswork out of the equation: you can see the estimated cost of each function at a glance and make an informed decision.

To get insight into your serverless application, go to https://platform.lumigo.io/auth/signup and sign up for a free account.