Yan Cui

Apr 07 2023

Lambda has a 6MB size limit on request and response payloads for synchronous invocations. This affects API functions and caps how much data you can send to and receive from a Lambda-backed API endpoint.

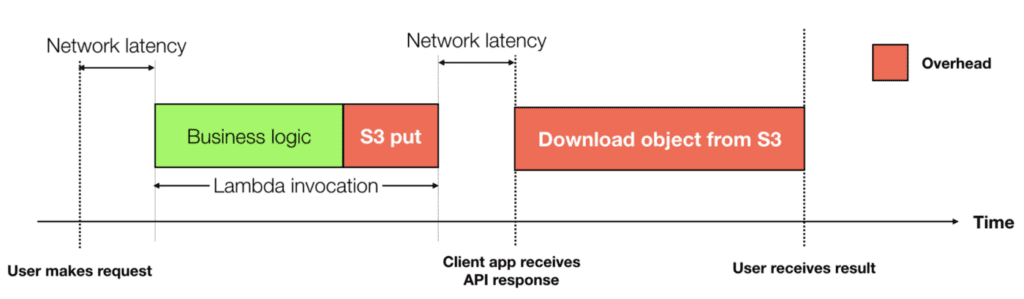

I have previously written about several workarounds for the request payload limit. But sometimes you also need to return a payload bigger than 6MB, such as a PDF or an image file. The common workaround is to put the file on S3 and then return a presigned URL.

The downside of this workaround is that it complicates the client application and hurts the user experience. Putting the object on S3 adds user-facing latency, and the client then has to download the object from S3, which adds even more latency.

With today’s launch of the new Response Streaming feature, we are now able to return payloads larger than 6MB.

How it works

At launch, only the nodejs14.x, nodejs16.x and nodejs18.x runtimes support this feature.

To make it work, you need to wrap your function code with the new streamifyResponse decorator like this:

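```javascript
const { Readable, pipeline } = require('stream')
const { promisify } = require('util')

// promisify(pipeline) also works on nodejs14.x, which lacks 'stream/promises'
const pipelineAsync = promisify(pipeline)

module.exports.handler = awslambda.streamifyResponse(
  async (requestStream, responseStream, context) => {
    // downloadLargeFileFromS3() is a placeholder that resolves to the file's contents
    const file = await downloadLargeFileFromS3()

    // wrap the contents in an array so the whole Buffer is emitted as one chunk
    const fileStream = Readable.from([file])

    // pipe the file into the response and wait until the stream has finished
    await pipelineAsync(fileStream, responseStream)
  }
)
```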

The awslambda global variable here is something that the Lambda service injects into the execution environment. You don’t need to import it yourself.

Notice that the function signature is now:

async (requestStream, responseStream, context) => …

instead of the normal

async (event, context) => …

The requestStream contains the stringified version of the invocation event, and the responseStream is a writable stream. Any bytes you write to the responseStream are streamed to the client.

The context object is the same as before.
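To make the write semantics concrete, here is a minimal sketch that writes a few chunks to responseStream and then ends it. The handler name streamGreeting and the collect and demo helpers are hypothetical, and the typeof awslambda guard is an assumption added so the file can also be loaded outside Lambda, where that global does not exist. Locally, a PassThrough stream stands in for responseStream.

```javascript
const { PassThrough } = require('stream')

// Hypothetical handler body: each write() sends a chunk towards the client,
// and end() tells Lambda the response is complete.
async function streamGreeting(requestStream, responseStream, context) {
  for (const part of ['Hello', ', ', 'streaming', ' world!\n']) {
    responseStream.write(part)
  }
  responseStream.end()
}

// awslambda only exists inside the Lambda execution environment, so only
// register the streaming handler there (assumption: guard for local runs).
if (typeof awslambda !== 'undefined') {
  module.exports.handler = awslambda.streamifyResponse(streamGreeting)
}

// Local helper: read everything written to a stream into one string.
async function collect(stream) {
  let text = ''
  for await (const chunk of stream) text += chunk
  return text
}

// Local usage: a PassThrough stands in for Lambda's responseStream.
async function demo() {
  const fakeResponse = new PassThrough()
  await streamGreeting(null, fakeResponse, {})
  return collect(fakeResponse)
}
```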

To receive a streamed response from a Lambda function, you can use the AWS SDK (JavaScript SDK v3, Go SDK or Java SDK v2). You can also enable a Lambda Function URL (which we covered in depth here) and use any HTTP client that supports streamed responses.
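For the Function URL route, here is a minimal sketch of a Node.js client that handles the body chunk by chunk instead of waiting for the whole response. It uses only the built-in http and https modules; streamGet is a hypothetical helper name, and it assumes the Function URL uses the NONE auth type (a URL with AWS_IAM auth would need SigV4-signed requests).

```javascript
const http = require('http')
const https = require('https')

// Sends a GET request and calls onChunk with each piece of the body as it
// arrives. Resolves when the response ends; rejects on non-200 or errors.
function streamGet(url, onChunk) {
  const client = url.startsWith('https:') ? https : http
  return new Promise((resolve, reject) => {
    const req = client.get(url, (res) => {
      if (res.statusCode !== 200) {
        res.resume() // drain the body so the socket can be released
        reject(new Error(`Unexpected status ${res.statusCode}`))
        return
      }
      // utf8 decoding keeps multi-byte characters intact across chunks
      res.setEncoding('utf8')
      res.on('data', onChunk)
      res.on('end', resolve)
      res.on('error', reject)
    })
    req.on('error', reject)
  })
}

// Usage (hypothetical Function URL):
// streamGet('https://<url-id>.lambda-url.<region>.on.aws/', (chunk) => process.stdout.write(chunk))
```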

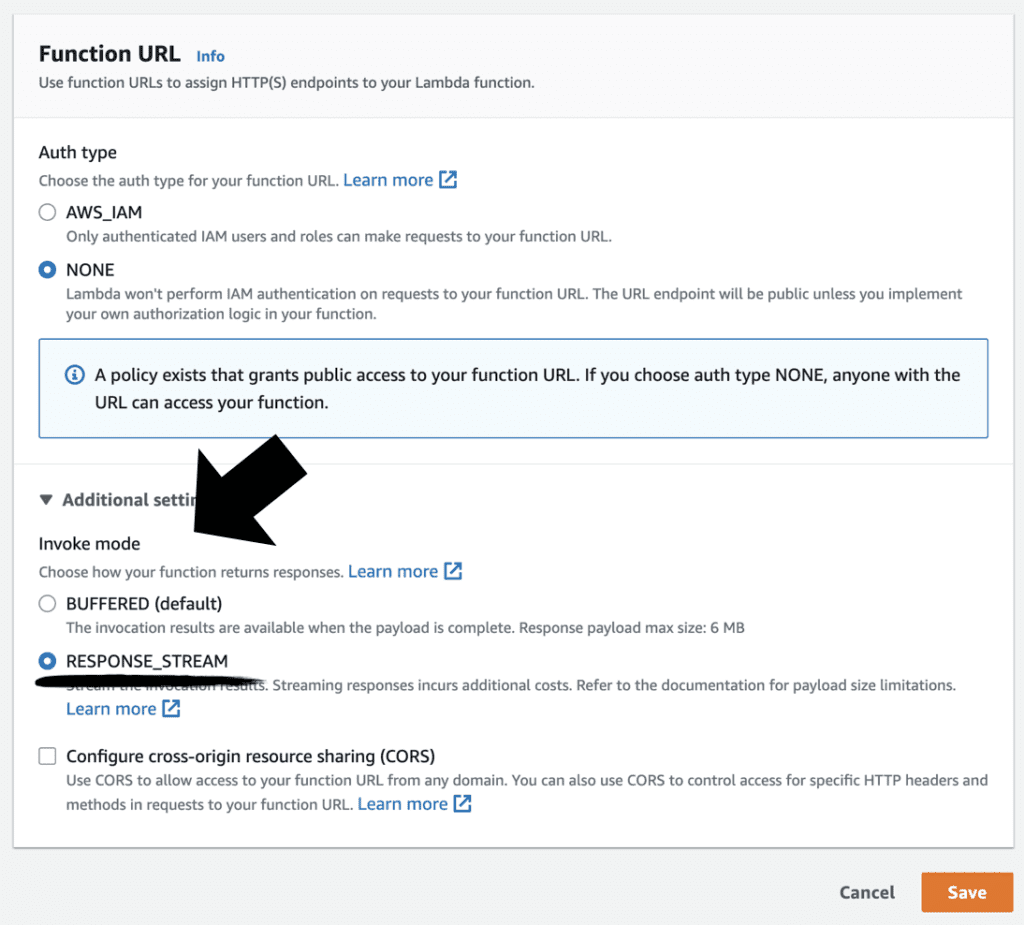

In most cases, you will be using Function URLs. You’d need to configure the Function URL to use the new RESPONSE_STREAM invocation mode. As noted in the description of this option, streaming responses incur additional costs. Please check the official Lambda pricing page to see the pricing model for streamed responses.
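If you manage the Function URL in code rather than in the console, the same setting maps to the InvokeMode parameter of the Lambda CreateFunctionUrlConfig API. The sketch below assumes the AWS SDK for JavaScript v3 (@aws-sdk/client-lambda) is installed; the helper names and the AWS_IAM default are assumptions, and the SDK is only loaded when the call is actually made.

```javascript
// Builds the CreateFunctionUrlConfig input. InvokeMode defaults to BUFFERED;
// RESPONSE_STREAM is what enables streamed responses.
function streamingUrlConfig(functionName, authType = 'AWS_IAM') {
  return {
    FunctionName: functionName,
    AuthType: authType, // 'AWS_IAM' or 'NONE'
    InvokeMode: 'RESPONSE_STREAM',
  }
}

// Creates the Function URL and returns it. Requires @aws-sdk/client-lambda
// and credentials allowed to call lambda:CreateFunctionUrlConfig.
async function createStreamingFunctionUrl(functionName, authType) {
  const { LambdaClient, CreateFunctionUrlConfigCommand } = require('@aws-sdk/client-lambda')
  const client = new LambdaClient({})
  const output = await client.send(
    new CreateFunctionUrlConfigCommand(streamingUrlConfig(functionName, authType))
  )
  return output.FunctionUrl
}
```

For an existing Function URL, UpdateFunctionUrlConfigCommand accepts the same InvokeMode field. A URL with the NONE auth type also needs a resource-based policy that allows lambda:InvokeFunctionUrl before it can be called publicly.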

Use cases

The most obvious use case for this new feature is to bypass the need for S3 when you need to return large objects from a Lambda-backed API. This improves the user experience and simplifies the client application.

Being able to stream responses to the caller can also improve the time-to-first-byte (TTFB). This is especially helpful when you need to return video or audio content: streaming it lets the client start showing the content to the user sooner, rather than waiting for the whole file to be available.
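The handler at the start of this post waits for downloadLargeFileFromS3() to finish before streaming anything, so the client still waits for the full download. A sketch that improves TTFB pipes the S3 object body into responseStream as it is read. It assumes the AWS SDK for JavaScript v3 (@aws-sdk/client-s3), whose GetObject Body is a Node.js Readable; the bucket, key and content type are hypothetical, and awslambda.HttpResponseStream.from is used as the helper AWS documents for setting the status code and headers of a Function URL response.

```javascript
const { pipeline } = require('stream')
const { promisify } = require('util')

const pipelineAsync = promisify(pipeline)

// Pipes any readable source into the response stream chunk by chunk, so the
// first bytes can reach the client before the source has been fully read.
async function streamToClient(source, responseStream) {
  await pipelineAsync(source, responseStream)
}

// Hypothetical bucket and key; GetObject's Body is a Node.js Readable here.
async function streamS3Object(bucket, key, responseStream) {
  const { S3Client, GetObjectCommand } = require('@aws-sdk/client-s3')
  const s3 = new S3Client({})
  const { Body } = await s3.send(new GetObjectCommand({ Bucket: bucket, Key: key }))
  await streamToClient(Body, responseStream)
}

// Only register the handler inside Lambda, where awslambda is defined.
if (typeof awslambda !== 'undefined') {
  module.exports.handler = awslambda.streamifyResponse(
    async (requestStream, responseStream, context) => {
      // Set the status code and headers before writing any body bytes.
      const httpStream = awslambda.HttpResponseStream.from(responseStream, {
        statusCode: 200,
        headers: { 'Content-Type': 'video/mp4' },
      })
      await streamS3Object('my-media-bucket', 'videos/intro.mp4', httpStream)
    }
  )
}
```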

You can also use this feature to stream incremental updates for long-running tasks. As I explained in my coverage of Lambda Function URL (see here), one of the reasons for using Function URL over API Gateway is to bypass API Gateway’s 30s limit. Of course, you’re still limited by Lambda’s 15-minute limit.

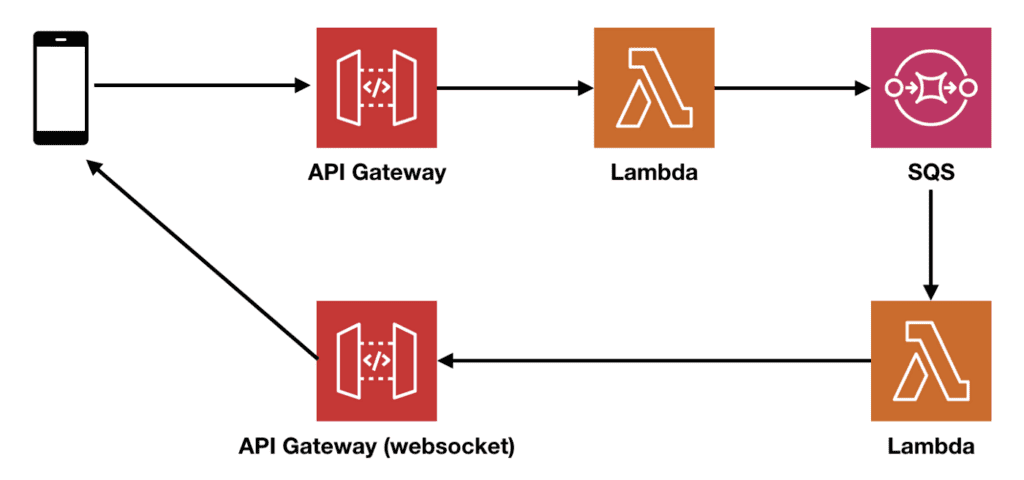

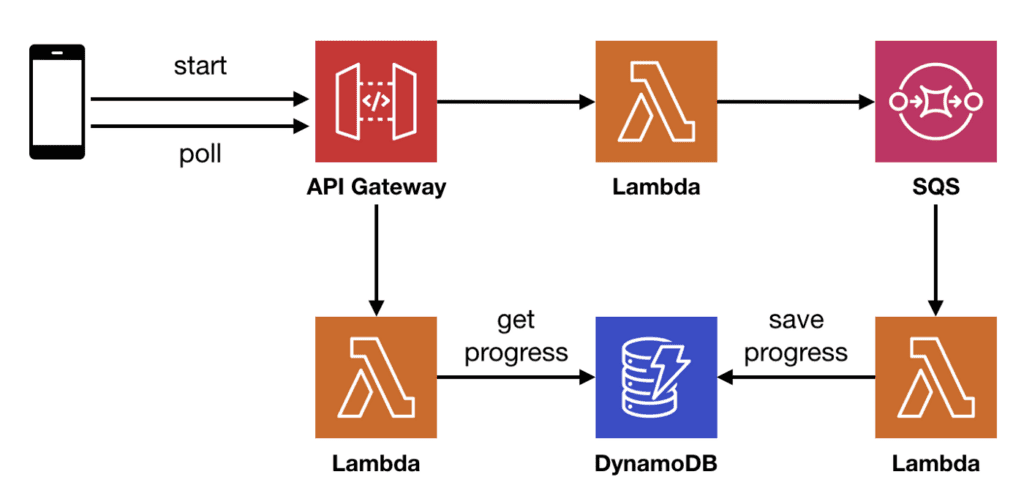

To improve the user experience for long-running tasks, many applications include a progress bar of some sort. However, sending progress updates from a long-running Lambda function is cumbersome. It often involves WebSockets or some form of client-side polling.

Using API Gateway WebSockets to send progress updates from a long-running function.

Using client-side polling to send progress updates from a long-running function.

With response streaming, you can drastically simplify things. The invocation that handles the initial user request can stream periodic updates to the client and then send the full result when it’s ready.

Using streaming response to send progress updates from a long-running function.
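A minimal sketch of that pattern, assuming newline-delimited JSON (NDJSON) as the message format: the function reports progress after each step of a hypothetical multi-step task and finishes with the full result, while the client-side parser reassembles lines that may be split across network chunks. runWithProgress, createLineParser and the message shapes are illustrative, not part of any AWS API.

```javascript
// Server side: run hypothetical async steps, streaming one NDJSON line of
// progress after each step, then a final line with all results.
async function runWithProgress(steps, responseStream) {
  const results = []
  for (let i = 0; i < steps.length; i++) {
    results.push(await steps[i]())
    responseStream.write(
      JSON.stringify({ type: 'progress', done: i + 1, total: steps.length }) + '\n'
    )
  }
  responseStream.write(JSON.stringify({ type: 'result', results }) + '\n')
  responseStream.end()
}

// Client side: a chunk may end mid-line, so buffer text until a newline
// arrives and only then parse the complete JSON message.
function createLineParser(onMessage) {
  let buffer = ''
  return (chunk) => {
    buffer += chunk
    let newline
    while ((newline = buffer.indexOf('\n')) !== -1) {
      const line = buffer.slice(0, newline)
      buffer = buffer.slice(newline + 1)
      if (line.trim() !== '') onMessage(JSON.parse(line))
    }
  }
}

// Inside Lambda this could be registered as (stepA and stepB are hypothetical):
// module.exports.handler = awslambda.streamifyResponse(
//   (requestStream, responseStream) => runWithProgress([stepA, stepB], responseStream))
```

Keeping each update on its own line means the client never has to guess where one message ends, which matters because the network can split or merge chunks arbitrarily.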

These are just a few of the ideas that come to mind. Maybe it’s even possible to implement QUIC-style response streaming if you’re doing server-side rendering? Let us know what you think about this new feature and other use cases that we’ve missed.

Caveats

Payload size limit

There’s a default limit of 20MB on streamed response payloads. Fortunately, this is a soft limit and can be raised via the Service Quotas console or by raising a support ticket.

Compatibility with API Gateway

Streamed responses are not supported by API Gateway’s Lambda proxy (AWS_PROXY) integration. You can use an HTTP_PROXY integration between API Gateway and the Lambda Function URL, but you will be limited by API Gateway’s 10MB response payload limit. Also, API Gateway doesn’t support chunked transfer encoding, so you lose the benefit of a faster time-to-first-byte.

Compatibility with ALB

ALB’s Lambda integration doesn’t support streamed responses.

Runtime support

Only the nodejs14.x, nodejs16.x and nodejs18.x runtimes are supported.