Sep 24 2019

There is a paradox at the heart of serverless. While it’s promoted as a very agile way to develop, a way to push your product as fast as possible to your customers, many development teams find it really difficult to work fast.

Why does this happen and can we solve the issue?

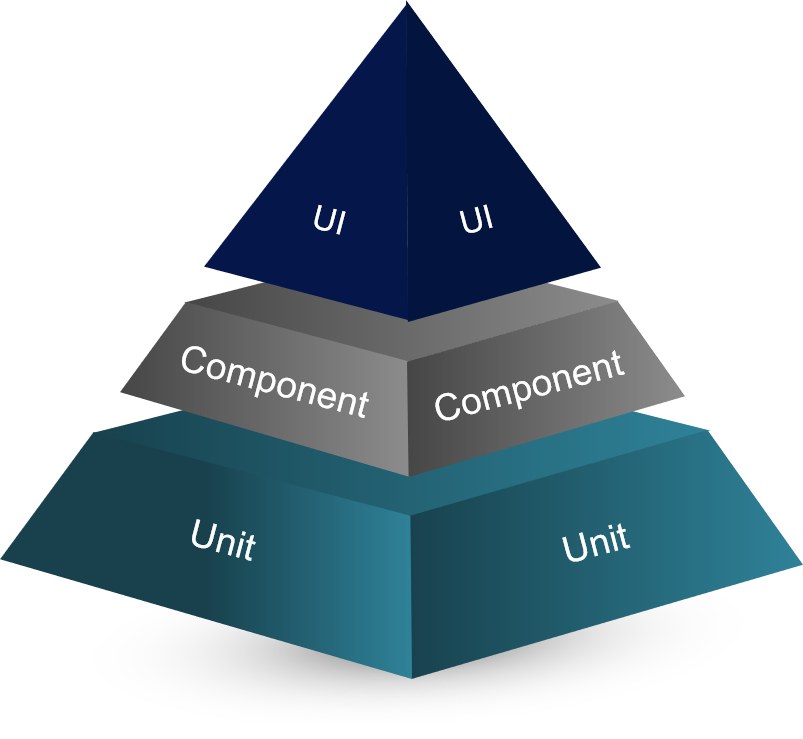

The three legs of serverless

Let’s start with what makes serverless such a powerful paradigm. Serverless rests on three legs, each of which contributes to the agility that serverless is known for.

1. Service provider

AWS and other cloud providers give you the ability to access any number of services, say for authentication or database, and everything just works out of the box. It substantially reduces the amount of work required for configuration, debugging or making sure your application scales well. Every developer knows how much goes into making sure the code they write is functional, but with serverless, the service provider massively reduces the amount of work you have to do as a developer.

2. Elasticity

The cloud provider is also able to give you the exact resources you need. For example, if you need a lot of storage because you’re serving videos, it’ll give you all the hard drives you need. If you need a lot of CPU power because you’re doing a lot of expensive calculations, it’ll give you as many CPUs as you need, with as much memory as you need. It also works the other way around. If you don’t need to store a lot of data or run CPU-intensive tasks, then you won’t get those resources.

3. Cost

The third leg is cost. This stands above the other two legs, meaning you’re paying only for what you’re using. Serverless gives you the power to take ideas very quickly from planning to production without paying too much money. You can iterate very fast and get feedback without paying for expensive servers for something that may or may not prove to be a long-term success. If the service proves to be successful then it’s very easy to scale up thanks to the elasticity provided by the cloud vendor.

So, why is serverless productivity a problem?

The development cycle always follows the same pattern. First we plan, then we write the code, then we build or compile it, and at the end we test it. It’s a circular motion that never really ends. After testing you find a bug, you rewrite the code, you build it again, and so on.

When a developer is working with Containers or locally on their own laptop, all they have to do is take a Docker for that web server or run it locally on their laptop. It just works out of the box and they can just test their code against that Docker. But in the world of serverless, the service provider doesn’t give us a docker that you can just run on your machine. You can’t just take a Docker of Athena and install it on your local machine.

The main reason that the serverless development cycle is slow is that there’s no getting around the fact that to really test your code properly it must be done in a cloud environment.

True, there are all kinds of unofficial mocks that mimic the behavior of many of the most common services, but there’s nothing that works exactly like the real thing.

That means that during development you have to take your code and push it to your cloud environment, and that means this entire cycle of development, instead of something that takes a few seconds can take a couple of minutes each time. It slows down your thinking process.

At Lumigo, we also started slowly and encountered these problems, but over time we’ve developed some nice tricks to improve our development speed, and our ability to debug and test code more quickly.

Local isn’t the answer, but it’s a start

Testing locally will never be as thorough as on the cloud. A lot of people ask why they can’t test locally using mocks. In the end it boils down to two main reasons.

The first is that while there are mocks for all the main AWS services, it’s very complicated to build a mock that works identically to those services. They have a lot of features and APIs, so making something identical, down to the exact API calls and parameters is difficult. Many mocks are not identical to their counterparts in the cloud.

The second issue is that many of the mocks are not officially supported. They are projects developers create in their spare time, so it’s understandable that there are often bugs and issues.

Despite those misgivings, you can absolutely start running tests locally. There are very good tools you can use locally to test basic flows, such as API Gateway calls to a Lambda. Then, as your flows become more complicated, you move testing to the cloud.

Unit Tests

This is a concept I’m sure all developers are familiar with. As much as 80% of your code can be covered by unit tests. So, the best approach is to write unit tests to use locally first, before turning to the cloud.

The problem is that are specific issues with running AWS Lambda unit testing: Lambda has a lot of limitations, such as disk space, memory, and running time. How do I mimic those behaviors on my machine? Also, Lambda doesn’t usually live in a void. It usually connects to one or two services. What if I have a very simple flow, a Lambda connecting to an API Gateway, how can I test that?

Serverless Offline

The Serverless Framework plugin, serverless-offline, gives you the ability to test the connectivity between a Lambda and an API Gateway, so you can test simple flows really easily.

Docker-lambda.

Docker-lambda gives you the ability to run your Lambda in a Docker that mimics completely the Lambda environment in AWS. You can use it locally on your machine to test various edge cases, as well as Lambda limitations such as memory and disk space.

Localstack

If you want to test against other services, like Kinesis, S3, or Athena, you can use localstack. It’s a powerful tool that combines a lot of mocks for various services into a single package.

In my opinion, if you get to the point of using Local Stack then it’s probably a good sign that your flow has become sufficiently complicated that you should be moving your testing to the cloud.

When local testing just won’t cut it

The moment you understand you need to start testing in the cloud environment, you’ll find that the single biggest bottleneck is the upload.

When you want to start testing on the cloud, you need to upload the file with the Lambda and the various resources to the AWS environment, and AWS will immediately start recreating that environment.

This part takes a lot of time, so here are 8 tips for accelerating this process.

1. Upload your environment ahead of time

One of the slowest parts in creating your environment is the resource creation, your DynamoDB, S3 buckets, and so on. It takes time but it only happens once. You know which resources your code is going to use. Provision those resources to the cloud as soon as possible, even before you’ve written the code. That way as soon as you’ve finished the code you can immediately start testing it in the environment without waiting for AWS to provision it. Another thing we do at Lumigo is that we have a lot of serverless services, we have noticed that many times we can initialize resources in parallel, it allows us to run the provision of the environment very fast.

2. Trim your package

Any libraries you don’t need should be removed. Make sure to choose the right languages. Java, for example, produces very large packages, taking up dozens of megabytes, so it takes a long time to upload. If you’re doing a lot of machine learning coding, there are many layers that package many known machine learning libraries, which you can use in your code without uploading all the time.

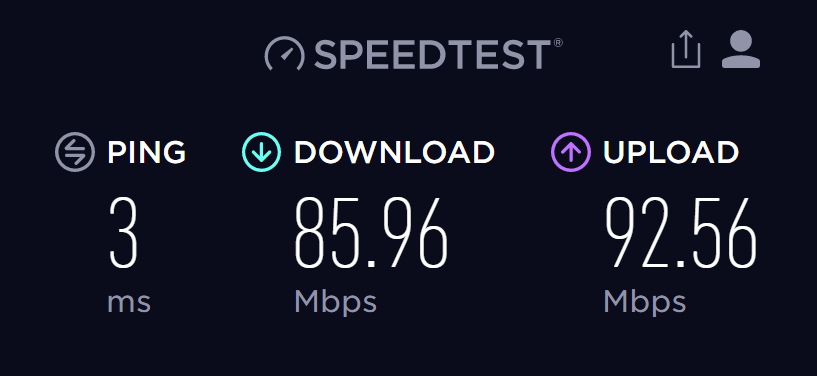

3. Pay for the fastest uploads possible

In serverless, getting fast upload speeds from your ISP is very important, and something that’s absolutely worth spending money on. It may sound simple but this is probably the most important piece of advice here.

4. Debug in the cloud

The AWS Lambda console enables you to do quick changes in your code. For example, If you want to add a debug message, you don’t have to go through the whole process of writing it locally, then reuploading to the cloud and waiting for another minute for everything to sync, instead, you can just make the change in the console and save it to your environment. But remember: use it to debug, don’t use it in production!

5. Deploy only your changes

Serverless Framework gives you the ability to deploy only specific functions. So, if you’re building a service with many functions, but you only need to make changes to one function, deploy only that function.

6. Use S3 transfer acceleration

This special feature of CloudFront Edge speeds up the entire upload process. There are nearly 200 CloudFront Edge points-of-presence, which means there is usually one very close to your location, so it can really speed up the upload. AWS has also released a special service for serverless called Lambda@Edge.

7. Provide each developer their own development environment

That way developers don’t interfere with each other’s work. If one developer makes a change, it doesn’t interfere with somebody else’s work.

8. Always use unit testing

Wherever possible, try to test using a local toolset. It will almost always be the fastest way to test and debug your code. Learn more in our guide about Lambda unit testing.

Summary

So, there you have it, eight tips that taken together can really accelerate your development flow and help your team to get the most out of this powerful serverless paradigm.

With serverless, there’s no getting away from the fact that you need to work in the real cloud environment to ensure your code functions exactly as it should. But that doesn’t mean there’s no room for local testing. On the contrary, always start by testing as much as possible locally, and then, when your flow is sufficiently complicated, turn to the cloud. It may take longer, but with the tips above you’ll be able to reach a point where the speed of serverless really starts to show.

With new technology like serverless it’s important that we work together to build a knowledge base, so please do get in touch on Twitter with your tips and working methods. We’d love to hear from you!